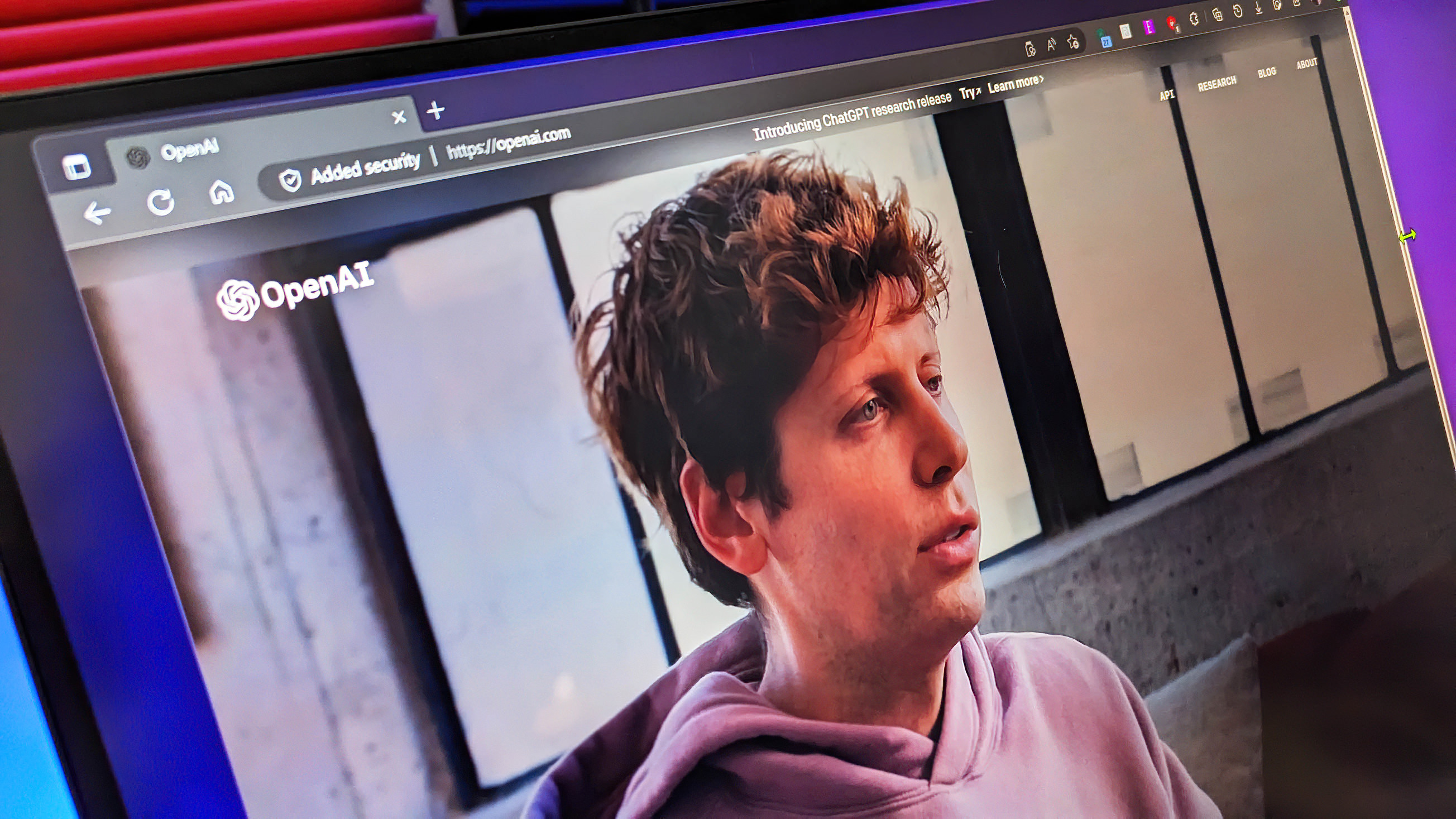

"We made a mistake in not being more transparent": OpenAI secretly accessed benchmark data, raising questions about the AI model's supposedly "high scores" — after Sam Altman touted it as "very good"

OpenAI's o3 AI model was reportedly trained using a sophisticated benchmark's problems and solutions, potentially explaining its exemplary performance.

In the next couple of weeks, OpenAI is slated to unveil o3-mini, the smaller version of its o3 series, with advanced reasoning capabilities across math, science, and coding. CEO Sam Altman claims the model is "very good," potentially outperforming the ChatGPT maker's o1 series. The company also announced that it would launch o3-mini in the application programming interface (API) and in ChatGPT simultaneously.

While details about the AI model remain slim, reports suggest OpenAI secretly funded FrontierMath and had access to its benchmark data, raising concerns about whether the company used that data to train o3 (via Search Engine Journal). The AI model posted high scores across a wide range of benchmarks, but if these concerns hold up, the published results may not reflect the model's true capabilities.

Perhaps more concerning, OpenAI secretly funded the development of FrontierMath, keeping the mathematicians involved in the dark. Epoch AI reportedly disclosed the funding to the mathematicians only in the final version of the paper introducing the benchmark, published on arXiv.

As recently highlighted on Reddit's r/singularity:

"Frontier Math, the recent cutting-edge math benchmark, is funded by OpenAI. OpenAI allegedly has access to the problems and solutions. This is disappointing because the benchmark was sold to the public as a means to evaluate frontier models, with support from renowned mathematicians. In reality, Epoch AI is building datasets for OpenAI. They never disclosed any ties with OpenAI before."

As a result, the validity of the FrontierMath project is in question. The benchmark is supposed to be used to test AI models, but if OpenAI already had the questions and answers, that defeats the point. It can no longer serve as a fair standard for gauging the reasoning and output of other models, since they would be at a competitive disadvantage.

According to a post on Meemi's Shortform about the FrontierMath project and OpenAI's involvement:


"The mathematicians creating the problems for FrontierMath were not (actively) communicated to about funding from OpenAI. The contractors were instructed to be secure about the exercises and their solutions, including not using Overleaf or Colab or emailing about the problems, and signing NDAs, "to ensure the questions remain confidential" and to avoid leakage. The contractors were also not communicated to about OpenAI funding on December 20th. I believe there were named authors of the paper that had no idea about OpenAI funding."

Interestingly, Epoch AI's Associate Director Tamay Besiroglu seemingly corroborated the highlighted details above but claimed there was a "holdout," potentially indicating that OpenAI didn't have unlimited access to FrontierMath's dataset:

"Regarding training usage: We acknowledge that OpenAI does have access to a large fraction of FrontierMath problems and solutions, with the exception of a unseen-by-OpenAI hold-out set that enables us to independently verify model capabilities. However, we have a verbal agreement that these materials will not be used in model training.

OpenAI has also been fully supportive of our decision to maintain a separate, unseen holdout set—an extra safeguard to prevent overfitting and ensure accurate progress measurement. From day one, FrontierMath was conceived and presented as an evaluation tool, and we believe these arrangements reflect that purpose."

Epoch AI's lead mathematician Elliot Glazer, meanwhile, offered a more reassuring take, saying he believes "OAI’s score is legit (i.e., they didn’t train on the dataset), and that they have no incentive to lie about internal benchmarking performances." Epoch AI is developing a holdout dataset, which OpenAI won't have access to, to independently test the o3 model. "However, we can’t vouch for them until our independent evaluation is complete," Glazer added.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.