OpenAI forms a new safety team led by CEO Sam Altman and announces it’s testing a new AI model (maybe GPT-5)

OpenAI's new safety team will ensure its advances meet critical security standards.

What you need to know

- OpenAI has a new safety team after its superalignment team was disbanded.

- The new team will focus on ensuring OpenAI's technological advances meet critical safety and security standards.

- OpenAI announced that a new AI model is in the testing phase but didn't categorically indicate which model or when it will ship to broad availability.

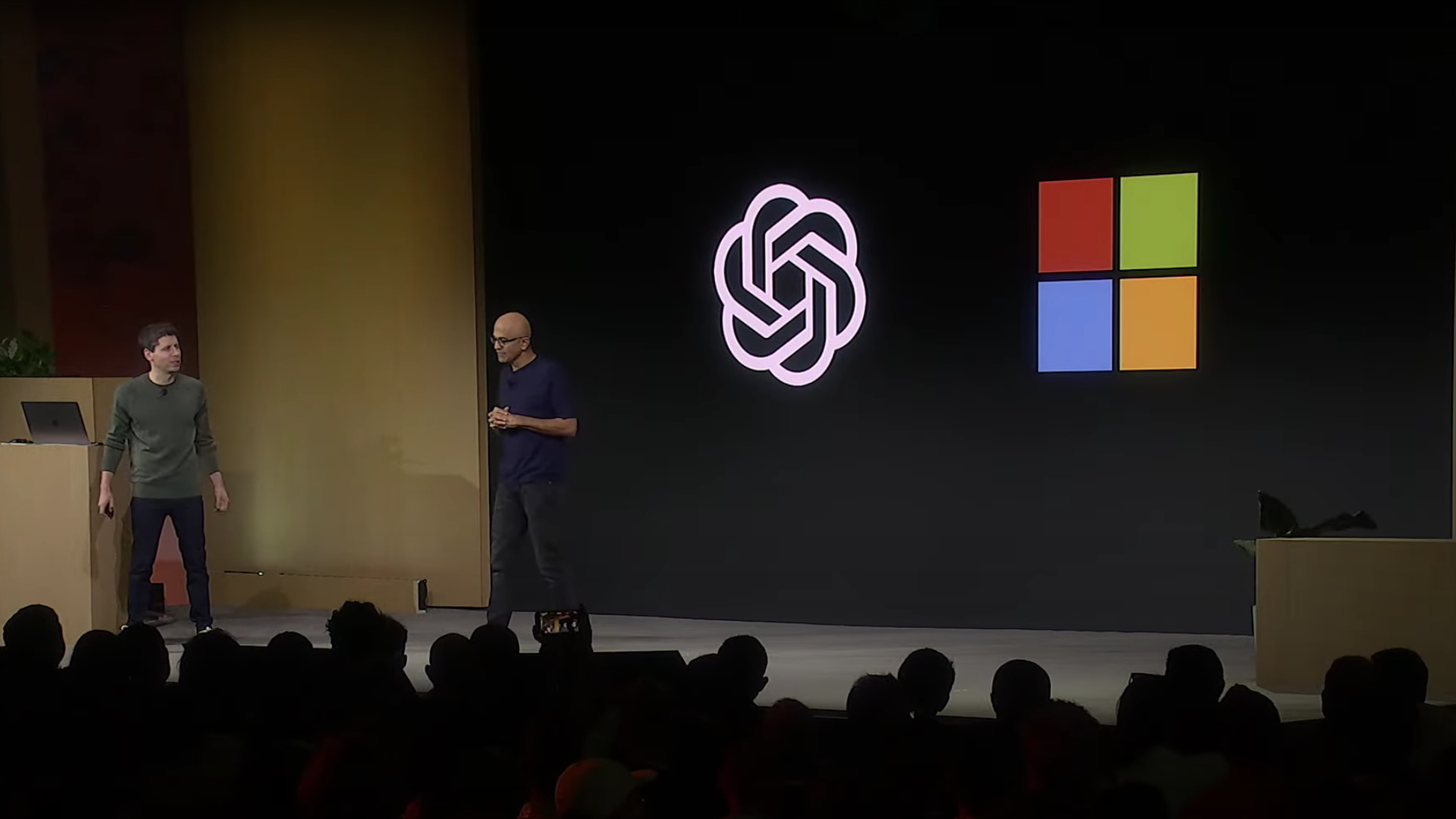

OpenAI seemingly dissolved its superalignment team after multiple members departed for several reasons, including the firm prioritizing "shiny products" over safety measures. However, the company has formed a new safety team with CEO Sam Altman at the helm alongside Adam D'Angelo and Nicole Seligman (who also serve as OpenAI board members).

The safety team's mandate is to ensure that OpenAI's technological advances in the AI landscape meet critical safety and security standards. The team has been tasked with "evaluating and further developing OpenAI's processes and safeguards."

The team will then present its findings to OpenAI's board. After reviewing them, the board will decide how best to implement the safety team's recommendations.

The ChatGPT maker also confirmed that it's in the testing phase of a new AI model. However, the company didn't indicate whether it's the "really good, like materially better" GPT-5 model (if that's what it'll be called).

Prioritizing safety over shiny products?

Multiple top executives left OpenAI shortly after the company launched its new flagship GPT-4o model with reasoning capabilities. Most employees who left the hot startup were part of the firm's superalignment team, including Jan Leike, who led the alignment department.

Leike indicated he'd joined the firm because he thought it was the best place in the world to research "how to steer and control AI systems smarter than us." However, Leike constantly disagreed with top executives over core priorities for next-gen models, including security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and more.

According to the former head of alignment, it was difficult for OpenAI to address some of the issues raised, making him feel like the company wasn't on the right path. Instead, the company seemed more focused on "shiny products," while safety culture and processes had taken a back seat.

I'm excited to join @AnthropicAI to continue the superalignment mission! My new team will work on scalable oversight, weak-to-strong generalization, and automated alignment research. If you're interested in joining, my dms are open. (May 28, 2024)

Leike, OpenAI's former head of alignment, recently announced that he'd joined Amazon-backed Anthropic, where he plans to continue his superalignment mission. He will specifically focus on "scalable oversight, weak-to-strong generalization, and automated alignment research."

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.