Microsoft wants to make AI safer, and it just unveiled a service to help

This platform will help create a safe online environment by placing guardrails on AI-generated content.

What you need to know

- Microsoft recently shipped Azure AI Content Safety to general availability.

- It's an AI-powered platform designed to create a safer online environment for users.

- Azure AI Content Safety gives businesses more control by letting them tailor content policies, helping ensure that the content their customers consume aligns with their core values.

- The platform is now a standalone service, which means it can also be used with content from open-source models and other companies' models.

Microsoft just shipped Azure AI Content Safety to general availability. It's an AI-powered platform designed to "help organizations create safer online environments." The platform leverages advanced language and vision models to flag inappropriate content such as violence.

Many organizations have hopped on the "AI train" in the past few months to take advantage of the technology's capabilities, and many have made significant headway in their respective fields. The benefits range from helping professionals develop software in under seven minutes to helping students solve complex math problems, or even writing poems.

But it remains clear that users have reservations about generative AI. Some users have reported that the technology's accuracy is declining, while others say it is simply getting dumber. The technology also lacks safeguards to regulate the content users are exposed to while interacting with it.

Azure AI Content Safety aims to address this by giving organizations and businesses more control over the type of content users can access, while ensuring that it aligns with their core values and policies.

The platform first debuted as part of the Azure OpenAI Service, but it's now a standalone system. Microsoft explained the platform in a blog post:

"That means customers can use it for AI-generated content from open-source models and other companies’ models as well as for user-generated content as part of their content systems, expanding its utility."

This reflects Microsoft's goal of giving businesses the tools they need to deploy AI technology safely.


> "We’re at a pretty amazing moment right now where companies are seeing the incredible power of generative AI. Releasing Azure AI Content Safety as a standalone product means we can serve a whole host of customers with a much wider array of business needs."
>
> Eric Boyd, Microsoft Corporate Vice President, AI Platform

Azure AI Content Safety is big on adaptability

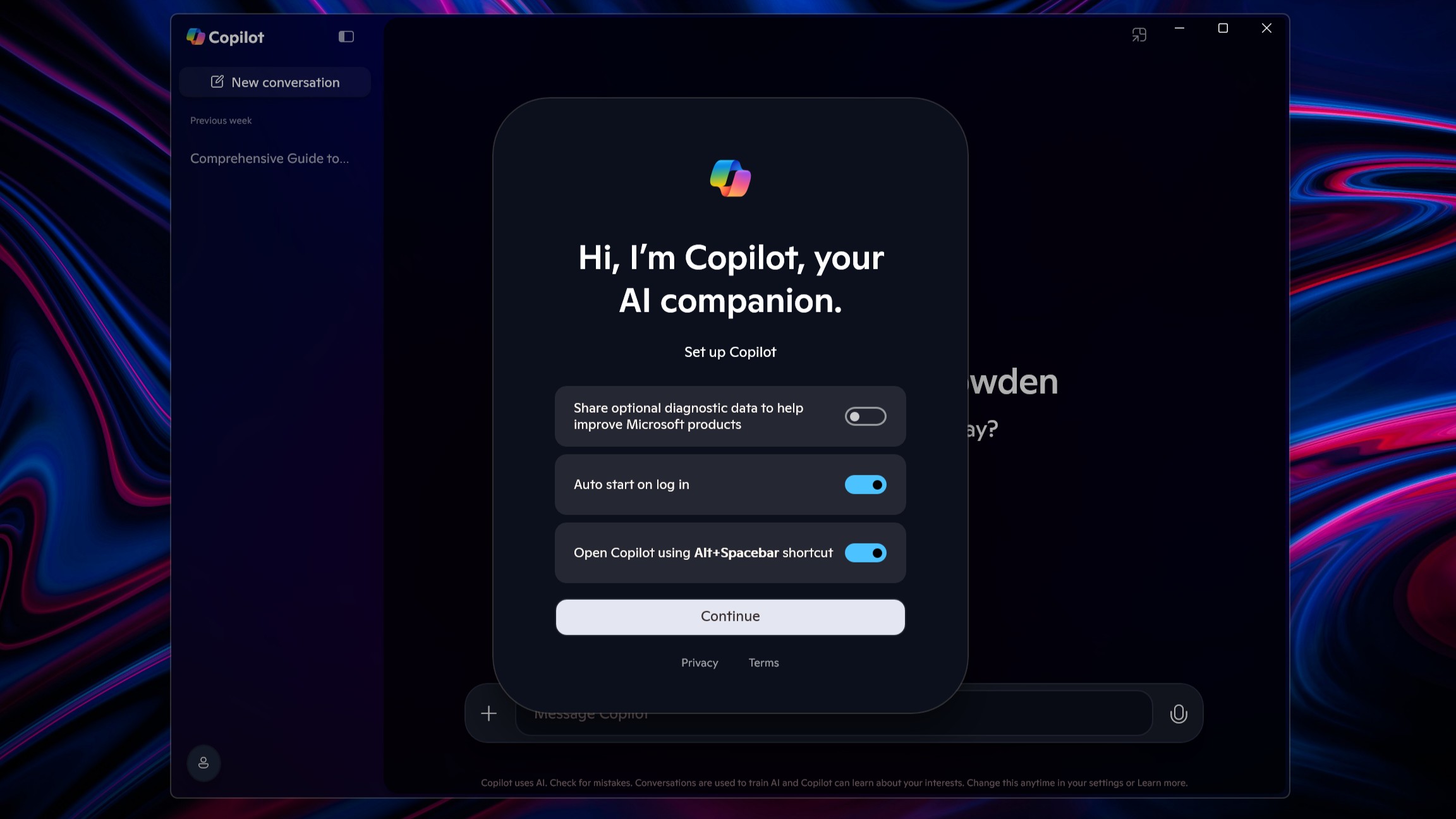

Microsoft already uses Azure AI Content Safety to place guardrails on its own AI-powered products, including GitHub Copilot and Microsoft 365 Copilot. Now the same capabilities are available to other businesses and organizations, which can tailor the platform's policies to better match their customers' needs.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.