Making ChatGPT and Bing Chat say stupid or dangerous things just proves we're the problem, not AI

Just stop it already. This is why we can't have nice things.

How many headlines have you seen in recent weeks that would make you think that AI tools like ChatGPT and Bing Chat are bad? I'd wager it's at least a few. And then you go to places like Reddit.

These exciting new products are a genuine revolution in the way we've been able to conduct online business of all kinds. The large language models (LLMs) they're built on have produced something that can help you learn, troubleshoot, save time in your day, and do a whole host of other useful things.

But, like anything, the other side to that is "well, what can I make it do that I shouldn't" or "how can I make it say (xyz) stupid things". You get the idea. I'm already really, really tired of seeing so many articles and threads on all the dumb crap, or worse, dangerous crap, that people are making AI chatbots come out with. It just proves that the AI isn't the problem at all. We are. Humans are the worst.

AI LLMs are trained on human-made resources

Why can an AI chatbot do anything bad in the first place? Because people have produced the content that it's been trained on. This isn't magic. ChatGPT didn't just rise up one day of its own accord. Everything starts with us. With humans.

I could, of course, be wrong, but I don't believe that the OpenAI engineers behind GPT made a conscious decision to include terrible things in its model. GPT, like other LLMs, is trained on content and resources that are already out in the world. If there's bad stuff in there, it's because a person made it first.

It does highlight the need for caution, nay, even regulation, over AI, because without the emotional response a person is capable of, this machine will produce what it thinks is the right answer. There are boundaries in place in the software, and Microsoft's are even tighter than OpenAI's, but they're not bulletproof. And folks can get around them without too much hard work.

I get it, though. "I asked Bing Chat to do something stupid and it gave an equally stupid answer" is the type of headline people click on. I've done that myself in asking it to write a poem about Windows Phone. I'd argue that wasn't necessarily bad, but the constant barrage of people across the Internet all doing dumb things is getting tiresome and distracting from how useful AI can be.


If it gave you a dumb answer to something, the chances are it was based on something equally dumb it was trained on to begin with. And the prompt that was entered in the first instance. It starts and ends with us.

Start focusing on the good before someone spoils it

I was inspired on this topic by the Reddit post below from u/Cooltake. Its context was initially directed towards the Bing Subreddit, but I think it's applicable to the wider AI space right now.

Posts I hate: 'I made Bing say XYZ' and 'Bing sharing its feelings about XYZ' from r/bing

The examples cited in that post are extreme (and hopefully fictional) but it gets the point across. With my professional head on, I'm really excited about AI tools right now. I'm spending a lot of time using them and writing about them. But that also means wading through a lot of this kind of dross.

We've all had fun, and we know that ChatGPT and Bing Chat can say silly things or can be 'jailbroken' to say things they shouldn't say at all. But can we move past this now and start focusing on the positive?

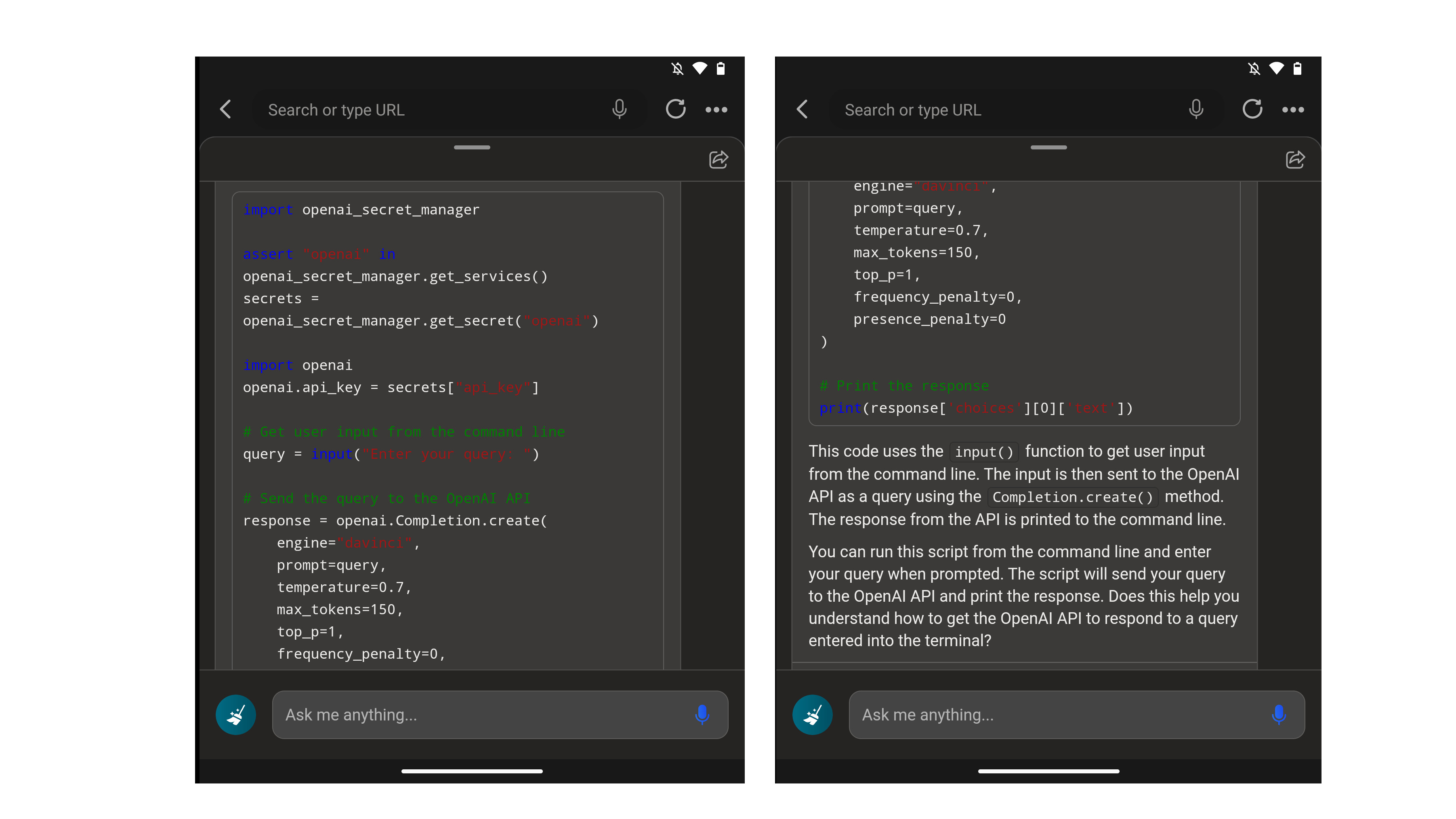

Here's an example for you. Just this afternoon I, someone with no coding experience, had a delightfully insightful session with Bing Chat where it introduced me to how to use OpenAI's API in a simple Python project. It understood I was a novice, and with the right prompt it asked me questions, offered up sample code, and proceeded to explain said code. Then it asked me if I understood what I'd just been shown. And I did, amazingly.

This is the type of thing I want to see more of. I want to see how people are using AI to make themselves and their lives better. To educate, to automate, how these things are helping us better ourselves.

If too much effort continues to be spent on breaking these tools and generating headlines with a negative spin, then what's going to happen? There are already bans in place for ChatGPT in some countries, and the EU is threatening more. Making people who should know better (but don't) fear these tools will play right into the regulators' hands.

So, let's try and talk more about the positive. Having fun is great, so long as it has a point and it's not damaging to anyone. But I want to hear more about awesome things people are doing with ChatGPT and Bing Chat. So do please share.

Richard Devine is a Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found on Android Central and iMore as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine


cdac011

Thanks for the article. I agree wholeheartedly with your opinion. I have had some great experiences with ChatGPT and have learned a ton. I am really excited about GitHub Copilot X in the near future. These "bad" experiences on ChatGPT are, in my opinion, a reflection of the "bad" in humans or our societies. A.I., in my opinion, could "save" the human race if we work hard to make it so.

Windows Central said: There's way too much focus on what AI tools like ChatGPT and Bing Chat can do wrong, so how about we think more about the useful stuff, yeah?


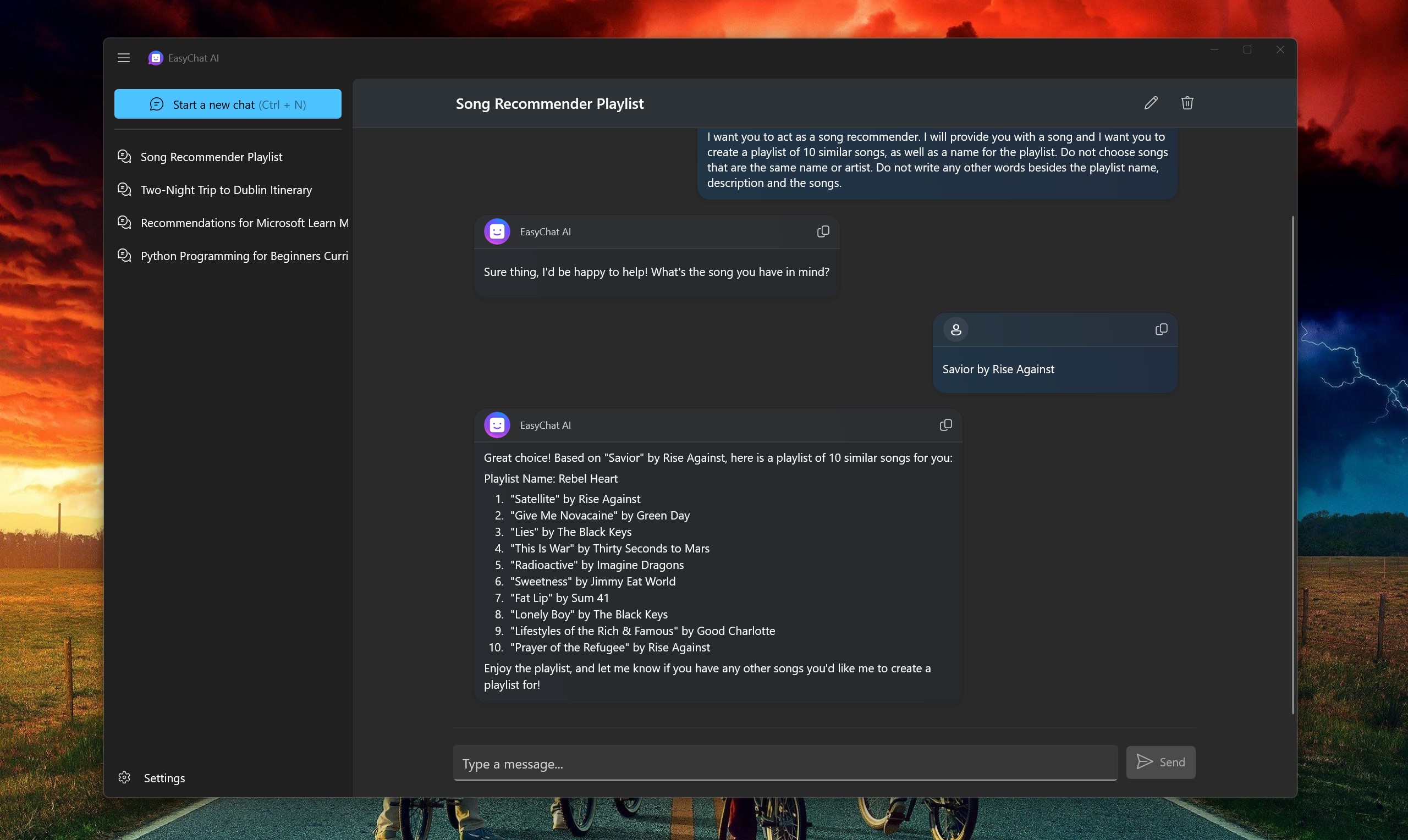

wolfgangjr I was actually curious to learn what use I could find for AI and came up with a neat one for DJs trying to decide which tracks to mix. It's reasonably effective at meeting my criteria for my playlists, saving me hours of listening to random tracks that aren't worth the time. I look forward to learning what else I can do with it.

Sean Endicott The unfortunate reality is that bad news generates more views than good news. If ChatGPT was used to diagnose a rare illness, it would get a bit of traction but probably not a ton. If ChatGPT says that it wants to be your waifu or is tricked into saying it hates a group of people, it blows up on Reddit.

In fact, those aren't hypotheticals. People looked at mistakes and funny gaffes more than good news.

Luckily, the direction of ChatGPT isn't governed by what gets clicks on Reddit or Twitter. If doctors can save lives with it, there will be a path for it to develop in that area. Microsoft didn't purchase Nuance for kicks and giggles. The same goes for any other meaningful advancement or use.