Former Google lead says we should "seriously think" about pulling the plug on AI once it starts self-improving: "It’s going to be very difficult to maintain that balance"

Will self-improving AI end humanity? Former Google CEO says we should cut it off whenever it gets too close.

Aside from safety and privacy concerns, the possibility of generative AI ending humanity remains a critical question as the technology rapidly advances. More recently, Roman Yampolskiy, an AI safety researcher and director of the Cyber Security Laboratory at the University of Louisville (well known for his prediction that there is a 99.999999% chance AI will end humanity), said that the coveted AGI benchmark is no longer tied to a specific timeframe. Instead, he said, reaching it comes down to whoever has enough money to buy enough computing power and data centers.

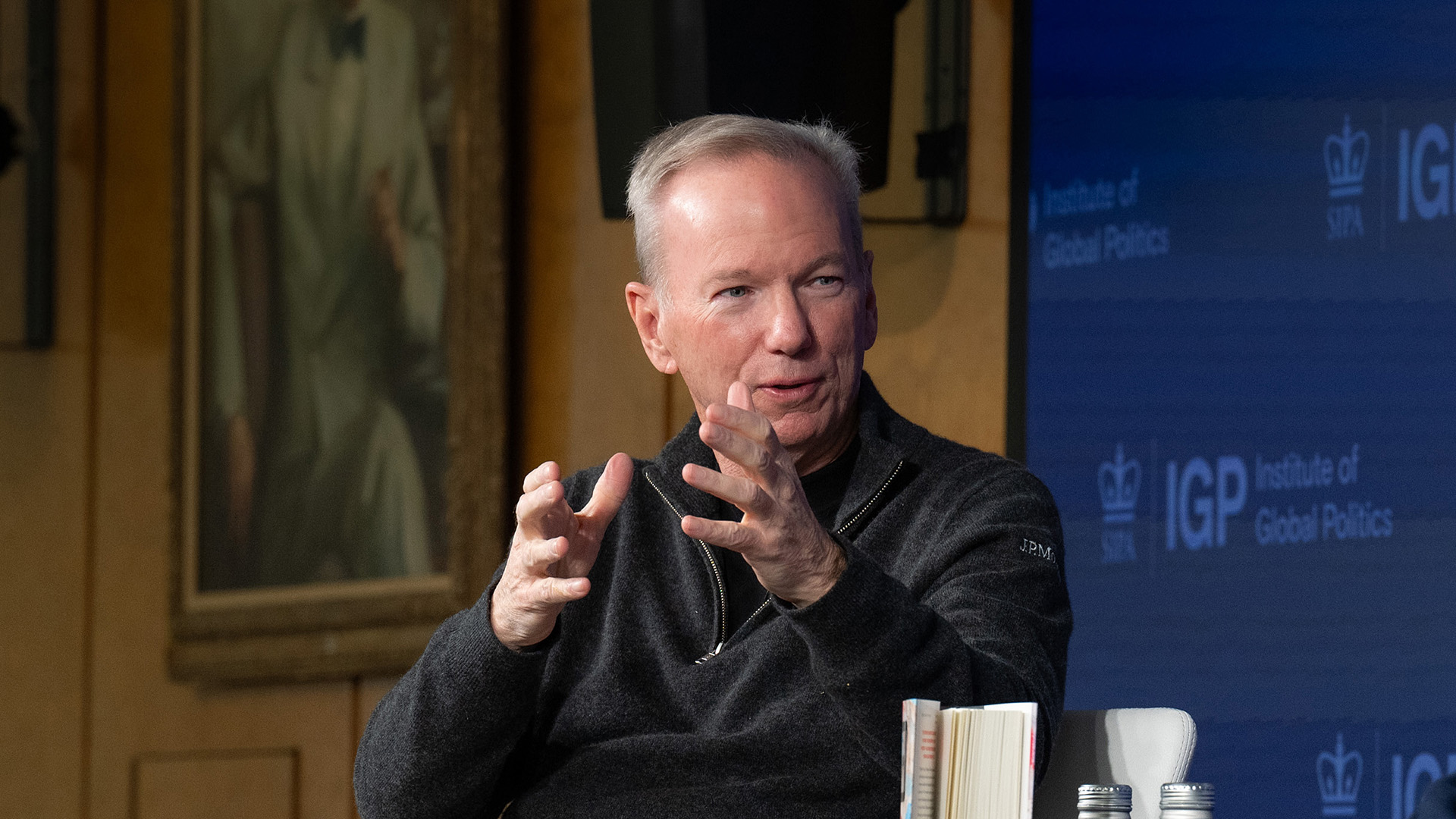

As you may know, OpenAI CEO Sam Altman and Anthropic CEO Dario Amodei predict AGI will be achieved within the next three years, bringing powerful AI systems that surpass human cognitive capabilities across a range of tasks. While Altman believes AGI will be achieved with current hardware sooner than anticipated, former Google CEO Eric Schmidt says we should consider pulling the plug on AI development once it begins to self-improve (via Fortune).

In a recent interview with the American television network ABC News, Schmidt said:

“When the system can self-improve, we need to seriously think about unplugging it. It’s going to be hugely hard. It’s going to be very difficult to maintain that balance.”

Schmidt's comments on the rapid progression of AI come at a critical time, as several reports suggest that OpenAI may have already achieved AGI following the broad release of its o1 reasoning model. Altman has also said that superintelligence might be only a few thousand days away.

Is Artificial General Intelligence (AGI) safe?

However, a former OpenAI employee warns that while OpenAI might be on the verge of achieving the coveted AGI benchmark, the ChatGPT maker may not be prepared to handle everything that comes with an AI system that surpasses human cognitive capabilities.

Interestingly, Altman says the safety concerns raised about AGI won't materialize at the "AGI moment." He added that AGI will whoosh by with surprisingly little societal impact. Instead, he predicts a long stretch of development between AGI and superintelligence, featuring AI agents and AI systems that outperform humans at most tasks, through 2025 and beyond.


Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.