ChatGPT and DALL-E 3 AI-generated images are now watermarked, though OpenAI admits it's "not a silver bullet to address issues of provenance"

It's now easier for people to identify AI-generated images.

What you need to know

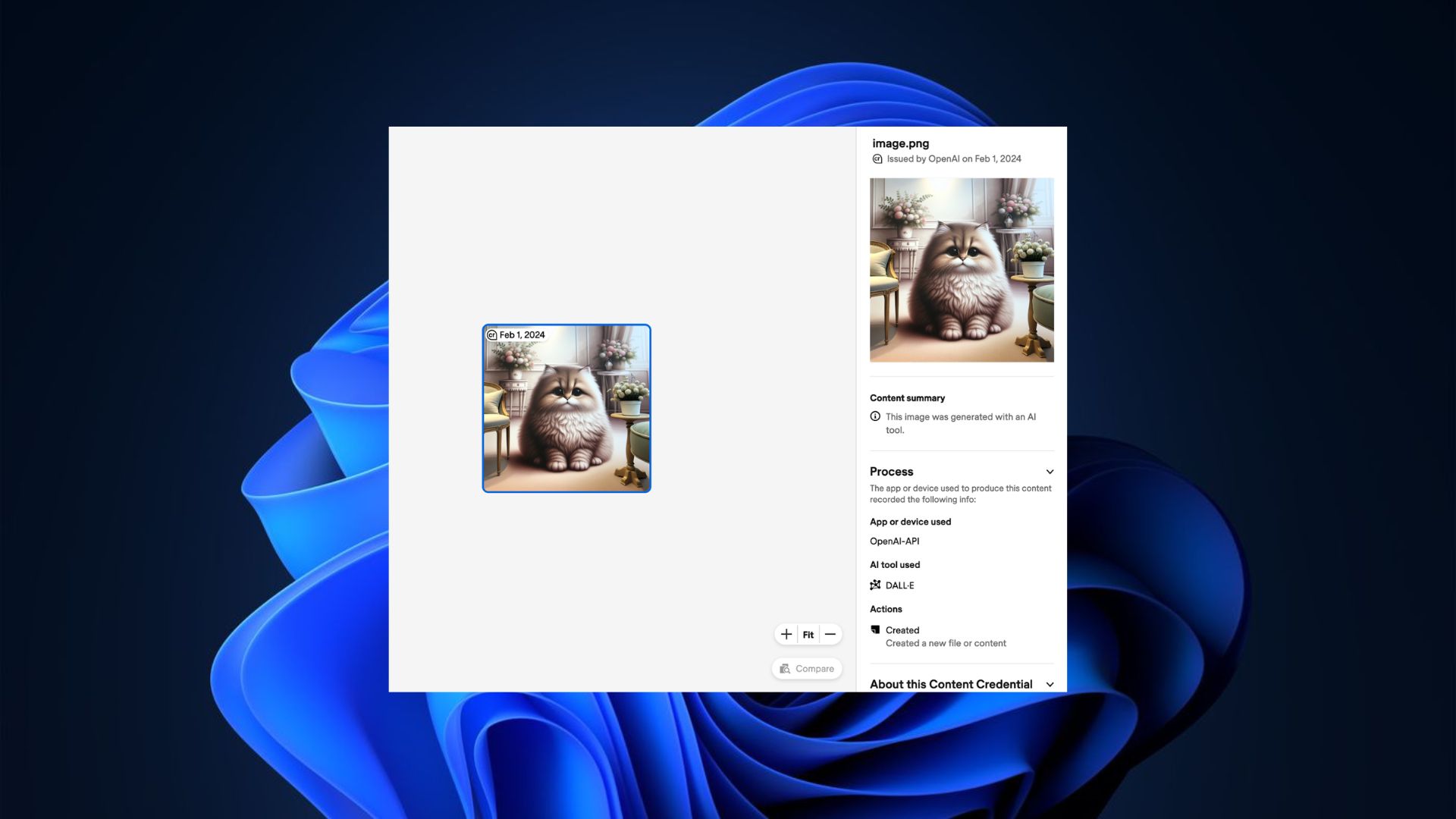

- OpenAI recently announced that it will now watermark images generated using ChatGPT or the API serving the DALL-E 3 model.

- This will help users identify images generated using these AI tools and distinguish them from real pictures.

- This change only applies to images; it won't affect AI-generated voice or text.

Amid the prevalence of AI deepfakes and misinformation across the web, OpenAI announced steps it is taking to combat the issue. Images generated using ChatGPT or the API serving the DALL-E 3 model will now feature watermarks. This is the company's bid to promote more transparency, as the watermark will inform people that an image is AI-generated.

The watermark will include details such as when the image was generated, along with the C2PA logo. However, OpenAI admits that incorporating Coalition for Content Provenance and Authenticity (C2PA) metadata into DALL-E 3 and ChatGPT isn't "a silver bullet to address issues of provenance." The company further highlighted that:

"Metadata like C2PA is not a silver bullet to address issues of provenance. It can easily be removed either accidentally or intentionally. For example, most social media platforms today remove metadata from uploaded images, and actions like taking a screenshot can also remove it. Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API."

It's worth noting that this change may slightly increase the file size of images generated using these tools, though it won't affect the quality of the pictures. Here's how the C2PA metadata may impact the size of AI-generated images:

- 3.1 MB → 3.2 MB for PNG through API (3% increase)

- 287 KB → 302 KB for WebP through API (5% increase)

- 287 KB → 381 KB for WebP through ChatGPT (32% increase)
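The percentages above can be checked with a short calculation. This is only a sketch: the function name `percent_increase` is illustrative, and the inputs are the rounded sizes from the list, so the results can differ slightly from figures computed on exact byte counts.

```python
def percent_increase(before: float, after: float) -> float:
    """Return the relative growth from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Rounded sizes taken from the list above (units cancel out in the ratio).
sizes = {
    "PNG through API": (3.1, 3.2),
    "WebP through API": (287, 302),
    "WebP through ChatGPT": (287, 381),
}

for label, (before, after) in sizes.items():
    print(f"{label}: {percent_increase(before, after):.1f}% increase")
```

With these rounded inputs, the three cases come out at roughly 3.2%, 5.2%, and 32.8%, which is in line with the quoted figures.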

The change is expected to roll out to mobile users next week on February 12, 2024.
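OpenAI's caveat that metadata "can easily be removed" can be illustrated with a small sketch. It assumes that C2PA data in a PNG lives in a chunk of type `caBX`, which is my reading of the C2PA specification rather than something stated in OpenAI's announcement. The helper names and the tiny synthetic image are hypothetical, and the code only checks whether such a chunk is present; it does not validate a manifest or its signature.

```python
import struct
import zlib
from typing import Iterator, Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
C2PA_CHUNK_TYPE = b"caBX"  # assumption: PNG chunk type used for C2PA data


def iter_chunks(data: bytes) -> Iterator[Tuple[bytes, bytes]]:
    """Yield (chunk_type, raw_chunk_bytes) for each chunk in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[offset:offset + 8])
        end = offset + 8 + length + 4  # header + payload + CRC
        yield chunk_type, data[offset:end]
        offset = end


def has_c2pa_chunk(data: bytes) -> bool:
    """True if the PNG carries a chunk of the assumed C2PA type."""
    return any(ctype == C2PA_CHUNK_TYPE for ctype, _ in iter_chunks(data))


def strip_chunk(data: bytes, chunk_type: bytes) -> bytes:
    """Rebuild the PNG without chunks of `chunk_type` (mimics a re-save)."""
    kept = b"".join(raw for ctype, raw in iter_chunks(data) if ctype != chunk_type)
    return PNG_SIGNATURE + kept


def make_chunk(chunk_type: bytes, payload: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, payload, CRC."""
    crc = zlib.crc32(chunk_type + payload)
    return struct.pack(">I", len(payload)) + chunk_type + payload + struct.pack(">I", crc)


# Synthetic 1x1 grayscale PNG with a placeholder metadata chunk (not a real manifest).
ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
idat = zlib.compress(b"\x00\x00")  # filter byte + one black pixel
tagged = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", ihdr)
    + make_chunk(C2PA_CHUNK_TYPE, b"placeholder manifest bytes")
    + make_chunk(b"IDAT", idat)
    + make_chunk(b"IEND", b"")
)
stripped = strip_chunk(tagged, C2PA_CHUNK_TYPE)

print(has_c2pa_chunk(tagged))    # True
print(has_c2pa_chunk(stripped))  # False
```

The stripped copy still decodes to the same pixels, which is the point of OpenAI's warning: a missing metadata chunk says nothing about whether an image was AI-generated.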

Watermarking is a step in the right direction, but is it enough?

One of the things that fascinates me about generative AI is its ability to generate lifelike images from text-based prompts within seconds. AI-powered tools designed for this specific purpose, like Image Creator from Designer (formerly Bing Image Creator) and ChatGPT, have only improved with time.

However, it's not been all fun and glam. Microsoft's Image Creator from Designer leverages OpenAI's DALL-E 3 technology, which pushed its image generation capabilities to the next level. Admittedly, the AI tool generates better-looking images compared to previous versions.


Unfortunately, the hype was short-lived due to a growing number of online reports citing instances where the tool was used to generate offensive and explicit content. This forced Microsoft to put guardrails in place and heighten censorship on the image generation tool to prevent misuse.

While these measures play a crucial role in establishing control over the tool, some users felt like Microsoft went a little over the top with censorship, ultimately lobotomizing it.


A few weeks ago, explicit images of well-known pop star Taylor Swift went viral across social media platforms, only for the truth to come to light later on, revealing that they were AI deepfakes believed to be generated using Microsoft Designer.

It seems the more censorship these tools undergo, the more difficult it becomes to fully explore and leverage their capabilities.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.

Kevin Okemwa: Watermarks on AI-generated images is a step in the right direction and might help identify deepfakes, but will it be enough to remedy this situation? Share your thoughts with me.

TheFerrango: Probably not. Watermarks may be forced into large, consumer used LLMs and generators, but on-premise models and large commercial ones won't be verifiable. It's a step in the right direction, but it's nowhere near being a solution.

Kevin Okemwa: I think so too. More censorship and guardrails may eventually impact the quality of the service offered by these tools as well.

TheFerrango: Oh yeah, I didn't mention regulations and censorship because I have mixed feelings about them, and have limited power anyway.