Microsoft's Cognitive Services and "AI everywhere" vision are making AI in our image

Microsoft is positioning itself as the world's platform for artificial intelligence, and that's a smart move.

In 2014 I wrote that Microsoft's Cortana would be the next big thing. I may have been right. Redmond's vision for its Johnny-come-lately AI is that it, like the GUI before it, will be pivotal in the evolution of the personal computing user interface.

Microsoft's ambitions for Cortana were evident in 2014.

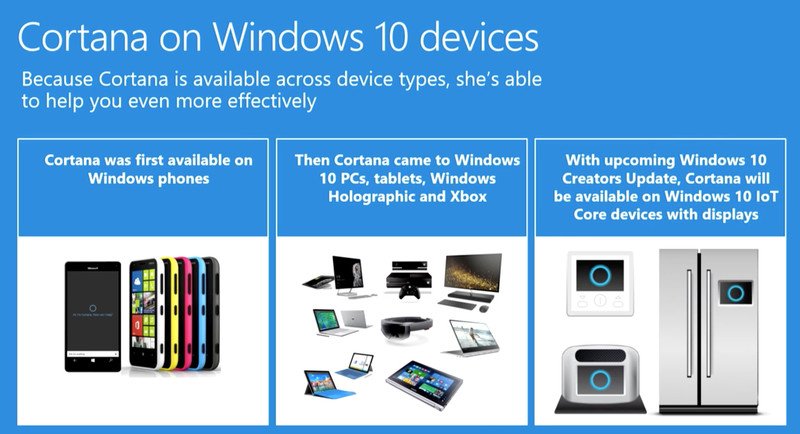

Microsoft envisions an unbounded AI that developers and partners will use the Cortana SDK to build into a range of everyday and innovative devices.

Microsoft also sees an AI that will follow users across platforms and devices while retaining access to all of their digital information.

Through Microsoft's Home Hub, a diverse range of appliances, and third-party Amazon Echo-like devices, Redmond is positioning Cortana and a cadre of bots as ambient, ever-present intelligences.

The fluid human-AI interactions that science fiction has primed us for are still a long way off. As the perceptive powers and intelligence of AI systems evolve, however, "inputting" data about our world, actions, and intentions will continue to become more natural.

Microsoft realizes that achieving a fluid human-AI relationship requires a human-AI symbiosis now, one that evolves the system's knowledge base, perceptions and behavior around meeting human needs.

Heads literally in the Cloud

Through the world's first cloud-based AI supercomputer and its Cognitive Services, Microsoft is investing in a comprehensive approach to improving both AI's intelligence and its "senses," and thereby our interactions with AI systems.

Andrew Shuman, Microsoft's corporate vice president of products for technology and research, adds:

…it's important...for us to make careful choices so that this technology ultimately translates into welfare for all, and that means design principles that focus on the human benefit of AI, transparency and accountability. Ultimately...humans and machines will work together to solve society's greatest challenges, to create magical experiences and change the world.

Part of the magic will come from the unique Azure-based AI supercomputer Microsoft has built.

Microsoft's AI supercomputer will power its AI vision.

This cloud-based intelligence is capable of translating all of Wikipedia in less than a tenth of a second, and the 38 million books in the Library of Congress in 76 seconds. It is this intelligence that Microsoft, as the platform and "do more" company, is making available to individuals and organizations. CEO Satya Nadella said:

...we are focused on empowering both people and organizations, by democratizing access to intelligence to help solve our most pressing challenges. To do this, we are infusing AI into everything we deliver across our computing platforms and experiences.

This isn't a purely altruistic move: Microsoft needs humans to make its AI better.

It takes two to tango

Microsoft has a four-pronged approach to its AI vision: harnessing agents like Cortana to facilitate the human-AI relationship; infusing every application, everywhere, with intelligence; making intelligent services accessible to all developers; and building infrastructure, in the form of the world's most powerful AI computer, and making it available to everyone.

To achieve the first three objectives, Microsoft is leveraging the reach of its pervasive products and services. By infusing Cortana and other intelligence throughout its services ecosystem (and the applications with which it interacts) and opening the cognitive services that power them to developers, Redmond will build a market for its AI. It will also glean massive amounts of useful data.

This data will feed Microsoft's Azure-based AI supercomputer (objective four), continually evolving it as it interacts with millions of users and addresses millions of scenarios daily. Nadella boasted, "We want to bring intelligence to everything, to everywhere, and for everyone." This human-AI co-dependence, or symbiosis, will help foster the evolution required to make AI more useful, to mature human-AI interaction and to build the trust Microsoft hopes humans will eventually invest in AI systems.

Human-AI interaction now is key to developing useful AI systems.

Naturally, a system's ability to integrate into our lives by "perceiving" what we perceive and fluidly responding to our needs and actions is key to overcoming the disjointed, almost forced, interactions we have today. If Microsoft's investments in Cognitive Services (which are meant to endow AI with human-like perceptions) pay off, we may no longer need to give our digital assistants the unnatural, deliberate attention it currently takes to solicit their help.

Becoming human

Microsoft's Cognitive Services include vision, face, emotion, video, language and other APIs that are positioned to endow AI systems with human-like perceptions. If developers embrace these tools, evolving AI systems could gain greater autonomy by proactively acting on what they "perceive." Shuman added:

Microsoft released a whole set of APIs…that allow for a lot more natural software experience...

Imagine a refrigerator-embedded Cortana that uses the face and emotion APIs to detect that a family member is sad, then suggests their favorite ice cream. Her proactive suggestion would be based on what she has learned about that family member over time as part of Microsoft's Home Hub vision.

AI will become a more natural and integrated part of our families.

The ubiquity of a democratized Cortana, combined with the power of an AI supercomputer and expanded cognitive abilities, would enable a much more fluid human-AI interaction than we see today. Nadella extols Microsoft's progress:

"There are a few companies that are at the cutting-edge of AI…But when you just look at the capability around speech recognition, who has the state of the art? Microsoft does…image recognition? Microsoft again, and those…are judged by objective criteria."

Additionally, Microsoft's AI recently reached parity with professional human transcriptionists when transcribing human dialogue. As AI's range of perception expands and its intelligence grows, parity with human abilities may segue into parity with human behavior.

Final Thoughts

It will be interesting to see AI's evolution in relation to Microsoft's mobile and AR visions. A convergence of these technologies in an ultra-mobile, pen-focused cellular Surface (geared toward written and typed language interaction) paired with AR glasses (geared toward spoken language and gesture interaction) would be a compelling intersection for Microsoft's Conversations as a Canvas vision.

How might such a union of technologies capture our natural, oh-so-human hodgepodge of verbal and non-verbal communication?

Jason L Ward is a columnist at Windows Central. He provides unique big picture analysis of the complex world of Microsoft. Jason takes the small clues and gives you an insightful big picture perspective through storytelling that you won't find *anywhere* else. Seriously, this dude thinks outside the box. Follow him on Twitter at @JLTechWord. He's doing the "write" thing!