Why you should question Consumer Reports' Microsoft Surface reliability claims

A recent Consumer Reports (CR) study calls out Microsoft's Surface line. Here is what it gets right, what it gets wrong, and why it differs so much from a similar report by J.D. Power.

CR recently made headlines by very publicly chiding another prominent laptop manufacturer over reliability. This time, Microsoft's Surface line is the target. Last year, CR notoriously went after Apple's new MacBook Pro lineup over battery life issues, making similar headlines.

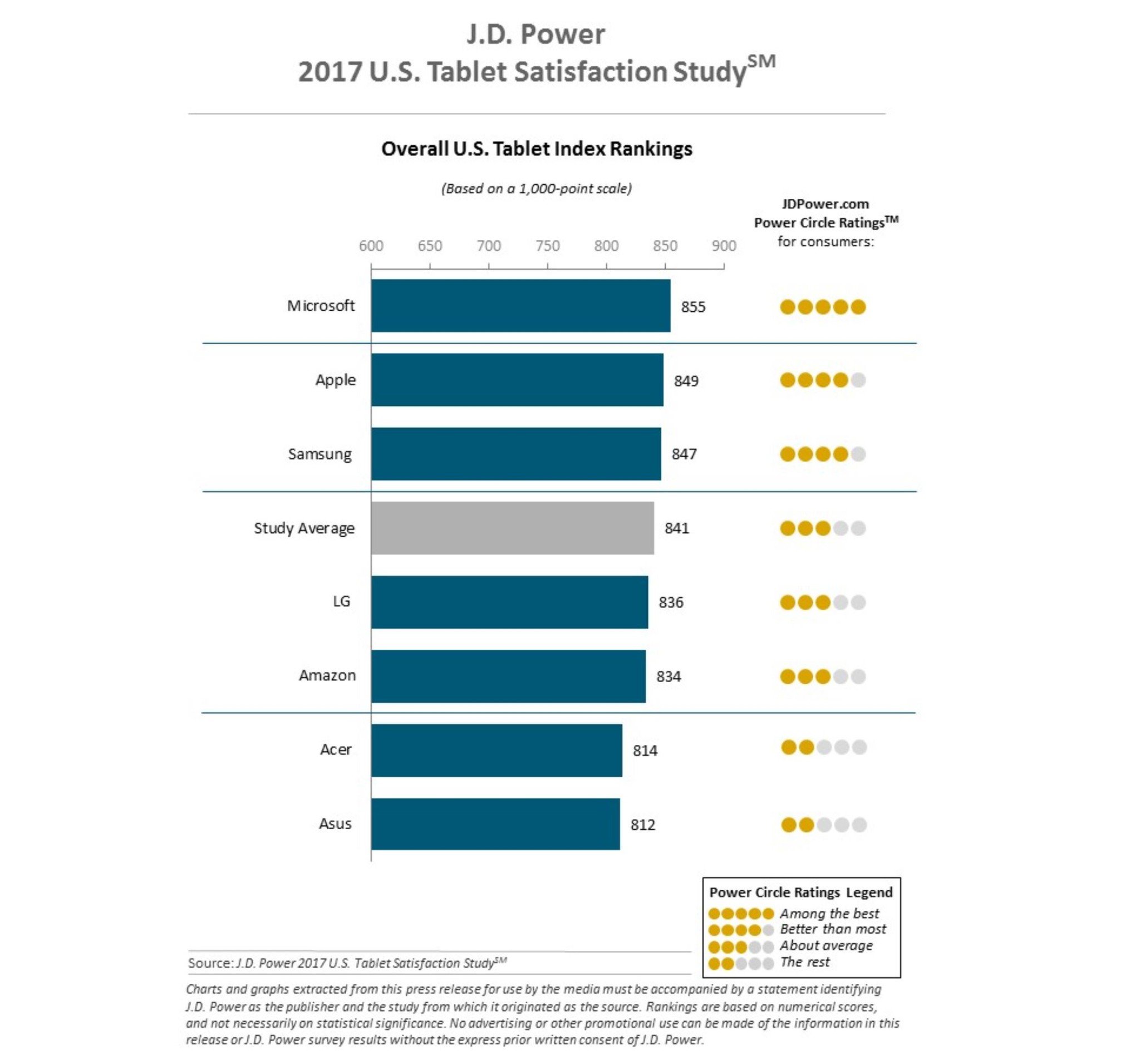

The new report differs dramatically from the 2017 J.D. Power Ratings for tablets, which were published in April. In that study, Surface beat out Apple for the No. 1 spot in customer satisfaction.

How can two reputable sources completely contradict each other? The devil, as they say, is in the details.

Reliability vs. user satisfaction

Perhaps the most significant difference between what CR and J.D. Power report comes down to focus. CR asks explicitly about reliability, whereas J.D. Power asks about user satisfaction.

While reliability and user satisfaction overlap somewhat, they are technically independent variables. For example, a user could enjoy a Surface today but run into reliability problems later.

Indeed, it is not evident that J.D. Power even asks about dependability. Instead, it asks about performance, ease of operation, features, styling and design, and cost. That favors CR's claim, but because CR's methodology is undefined (more on that below), its finding amounts to a black box in which "a few users" said something negative about Surface.

In fact, CR never defines what it means by reliability or how it is quantified. It seems to range from a device shutting down unexpectedly to full-on hardware failure, but we cannot know, because CR does not tell the reader.


Comparing methodology

In science, claims are written up in papers, which are then submitted to journals for publication. The peer review process is grueling. I know, because as a Ph.D. student I went through it numerous times. Picking apart methodology is the go-to move when reviewing any empirical claim.

Unfortunately, neither Consumer Reports nor J.D. Power is peer reviewed. As a result, you must rely on what both firms tell you, and often the methodology is opaque. Still, both companies share some details about how they conduct studies and arrive at conclusions.

Here is how the Consumer Reports and J.D. Power studies compare:

| Publication | Consumer Reports | J.D. Power |
|---|---|---|
| Style | Survey | Survey |
| Source | CR subscribers | Randomized sample |
| Subject | Reliability | User satisfaction |
| Sample size | 90,741 | 2,238 |
| Conclusion | Estimated | Quantified |

Consumer Reports relies on subscriber surveys: that is, people who pay for Consumer Reports, either online or for the magazine. J.D. Power, however, chooses respondents randomly and contacts them through the mail, telephone, or email.

J.D. Power shares more detail on its methodology:

We go to great lengths to make sure that these respondents are chosen at random and that they actually have experience with the product or company they are rating. For example, ratings for the Lexus IS vehicle come from people who actually own one. As a result, J.D. Power ratings are based entirely on consumer opinions and perceptions.

A red flag immediately comes up for Consumer Reports: its respondents skew toward older individuals, rather than the sample being controlled for age and other demographics (or at least randomized). Consumer Reports is not exactly something that people under 40 are likely to subscribe to these days. Meanwhile, J.D. Power specifically highlights that "Microsoft also has a higher proportion of younger customers than their competitors."

None of that necessarily means Consumer Reports is wrong or that older folks respond differently than younger ones. However, when it comes to dealing with OS problems, such as machines that "froze or shut down unexpectedly," as Consumer Reports puts it, there could be an age-related bias.

Consumer Reports, however, should benefit from a much larger sample size: roughly 90,700 respondents versus roughly 2,200 for J.D. Power. While 2,200 respondents may not sound like a lot for statistical purposes, it is in line with the baseline sample size used by many political and commercial polling firms.

Both studies, however, fall short on sample size, because neither tells us what share of its respondents actually own a Surface. Both are general surveys, of tablets (J.D. Power) or of tablets and laptops (CR), rather than Surface-only studies. One study could include 1,000 Surface owners and the other just 20, or vice versa. That's a huge problem.
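To see why the number of Surface owners matters, here is a rough sketch using the textbook 95 percent margin of error for a proportion, z × √(p(1 − p)/n). The subgroup sizes of 1,000 and 20 are the hypothetical figures from the paragraph above, and p = 0.25 borrows CR's 25 percent problem estimate purely for illustration. The formula assumes a simple random sample, which a subscriber-only survey is not, so it says nothing about selection bias; it only shows how quickly precision drops as a subgroup shrinks.

```python
import math

# z-score for a two-sided 95% confidence interval
Z_95 = 1.96


def margin_of_error(n: int, p: float = 0.5, z: float = Z_95) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion.

    p=0.5 gives the widest (worst-case) interval. Assumes a simple random
    sample; it does not account for selection bias in who responds.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return z * math.sqrt(p * (1 - p) / n)


# Full sample sizes reported by each firm (worst case, p = 0.5)
for label, n in [("Consumer Reports", 90_741), ("J.D. Power", 2_238)]:
    print(f"{label}: n={n}, +/- {margin_of_error(n):.1%}")

# Hypothetical Surface-owner subgroups (neither firm publishes these counts)
for n in (1_000, 20):
    print(f"Surface owners: n={n}, +/- {margin_of_error(n, p=0.25):.1%}")
```

Under those assumptions, the full samples come out to roughly ±0.3 points (CR) and ±2.1 points (J.D. Power), but a 20-owner subgroup producing a 25 percent estimate would carry roughly ±19 points, and the normal approximation itself is shaky at that size.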

J.D. Power notes that its respondents have owned their devices for less than one year, whereas Consumer Reports does not disclose ownership duration. That matters for reliability, especially since Consumer Reports makes claims that extend to the end of the second year of ownership. By design, J.D. Power's results do not capture long-term experience with a product, which is exactly where CR is calling out Microsoft and Surface.

J.D. Power does provide much more detail from its survey, including breakdowns by percentages and categories:

Customers using Microsoft tablets are more likely to be early adopters of technology. More than half (51 percent) of Microsoft customers say they 'somewhat agree' or 'strongly agree' that they are among the first of their friends and colleagues to try new technology products. This is relevant because early adopters tend to have higher overall satisfaction (879 among those who 'somewhat agree' or 'strongly agree' with this statement versus 816 among those who do not).

The U.S. Tablet Satisfaction Study, now in its sixth year, measures customer satisfaction with tablets across five factors (in order of importance): performance (28 percent); ease of operation (22 percent); features (22 percent); styling and design (17 percent); and cost (11 percent).
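The percentages J.D. Power lists for its five factors sum to 100, which suggests they act as weights on an overall index. J.D. Power does not publish its exact formula, so the sketch below simply assumes a weighted average of per-factor scores; the factor scores themselves are hypothetical, not J.D. Power data. It only illustrates how much more a change in performance moves the result than a change in cost.

```python
# Factor weights as listed in J.D. Power's study summary (share of overall index)
WEIGHTS = {
    "performance": 0.28,
    "ease_of_operation": 0.22,
    "features": 0.22,
    "styling_and_design": 0.17,
    "cost": 0.11,
}


def weighted_index(factor_scores: dict, weights: dict = WEIGHTS) -> float:
    """Combine per-factor scores into one index as a weighted average.

    ASSUMPTION: J.D. Power does not publish its formula; a plain weighted
    average is used here only to illustrate what the percentages imply.
    """
    if set(factor_scores) != set(weights):
        raise ValueError("factor_scores must cover exactly the weighted factors")
    total_weight = sum(weights.values())
    return sum(weights[f] * factor_scores[f] for f in weights) / total_weight


# Hypothetical factor scores on a 1,000-point scale (illustrative only)
example = {
    "performance": 900,
    "ease_of_operation": 880,
    "features": 860,
    "styling_and_design": 870,
    "cost": 800,
}
print(f"Composite: {weighted_index(example):.1f}")
```

Under this assumed weighting, a 100-point swing in the performance score moves the composite by 28 points, while the same swing in cost moves it by only 11.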

Consumer Reports remains much vaguer in comparison:

A number of survey respondents said they experienced problems with their devices during startup. A few commented that their machines froze or shut down unexpectedly, and several others told CR that the touch screens weren't responsive enough.

Anyone submitting a scientific paper would be ridiculed for using such nondescript language as "a number of" or "a few" when describing a data set.

Furthermore, J.D. Power's results are easier to pick apart than CR's, because J.D. Power discloses its own skew: it notes that Microsoft has a higher proportion of younger customers and that "early adopters tend to have higher overall satisfaction." In other words, J.D. Power's results show that Surface owners are younger and more likely to be early adopters, which pushes user satisfaction upward.

CR does not provide such information, which makes any outside analysis of its data pointless.

This is not the first time CR's methodology has been questioned. Its original MacBook Pro review for the 2016 refresh had glaring flaws that were called out by many, including Apple.

To avoid such nitpicking in the future, CR should simply be more transparent about its data set: define its terms, detail its methods, and publish actual numbers.

Projections are not facts

The biggest issue I have with the Consumer Reports study is that it reaches its conclusion by projecting past trends onto devices that were not actually surveyed:

Predicted reliability is a projection of how new models from each brand will fare, based on data from models already in users' hands.

Consumer Reports National Research Center estimates that 25 percent of Microsoft laptops and tablets will present their owners with problems by the end of the second year of ownership.

Projections are not bad in themselves; in fact, they are useful. But CR extends its conclusion to the new Surface Pro (2017) and Surface Laptop, even though it admits its survey only covers devices "bought new between 2014 and the beginning of 2017," which predates the availability of those PCs.

In other words, while it is fair to lump together the Surface 3, Surface Pro 3, Surface Pro 4, and Surface Book, CR is merely assuming the same trend applies for Surface Pro (2017) and Surface Laptop. The new Surface Pro and Surface Laptop could even be worse for reliability, but we do not know, and CR's survey does not account for them. Nonetheless:

The decision by Consumer Reports applies to Microsoft devices with detachable keyboards, such as the new Surface Pro released in June and the Surface Book, as well as the company's Surface Laptops with conventional clamshell designs.

To its credit, CR admits its survey data of older devices contradicts its lab testing of the new Surface Pro:

Several Microsoft products have performed well in CR labs, including the new Microsoft Surface Pro, which earned Very Good or Excellent scores in multiple CR tests. Based purely on lab performance, the Surface Pro is highly rated when used either as a tablet or with a keyboard attached.

None of these critiques is a slam-dunk dismissal of CR's findings; rather, they are considerations to weigh when interpreting its results.

Don't forget that Surface had real problems

The Surface line already has a black eye. The Surface Pro 4 and Surface Book had a spectacularly bad launch in late 2015. Intel's new Skylake processor platform, combined with the nascent Windows 10 OS, resulted in severe reliability problems with standby, slow Wi-Fi, Windows Hello, "blue screens," and more.

If you bought a Surface Pro 4 or Surface Book between October 2015 and May 2016, you likely had plenty of complaints. It was so bad that the future of the whole Surface endeavor was reportedly in question at Microsoft.

Going back further, the Surface 3's standard Micro-USB charging port caused confusion: customers who lost the included charger found they could not recharge the tablet with their phones' Micro-USB adapters, which did not supply enough power, resulting in complaints.

Likewise, the Surface Pro 3 had some early dead-pixel problems and fan reliability issues, and even the Surface Pro 2 had overheating components.

Despite those issues, Microsoft is learning from its mistakes. Usability and reliability with Windows 10 across all hardware are up, and the Surface Pro and Surface Laptop seem to have stabilized. There has been nary a peep from Surface Studio owners about reliability as we near its one-year anniversary. Even the anticipated "Alcantara-gate" for Surface Laptop has not (so far) panned out; there have seemingly been no mass returns over feared staining.

In the end, the damage CR does to Microsoft rests more on CR's reputation, however faded, than on sound methodology. Many older readers will simply base a future PC purchase on its reliability estimate.

While it may seem uncouth to accuse CR of chasing attention as it becomes increasingly outdated, there are holes in its methodology. Unfortunately, that may not matter, as the damage has already been done. It is now up to Microsoft to ensure that current and future generations of Surface do not earn such a reputation, whether it's deserved or not.

Daniel Rubino is the Editor-in-chief of Windows Central. He is also the head reviewer, podcast co-host, and analyst. He has been covering Microsoft since 2007 when this site was called WMExperts (and later Windows Phone Central). His interests include Windows, laptops, next-gen computing, and wearable tech. He has reviewed laptops for over 10 years and is particularly fond of 2-in-1 convertibles, Arm64 processors, new form factors, and thin-and-light PCs. Before all this tech stuff, he worked on a Ph.D. in linguistics, performed polysomnographs in NYC, and was a motion-picture operator for 17 years.