When Microsoft's AI-driven camera and mixed reality missions meet...

AI and mixed reality are technologies that Microsoft and other tech giants see as the future of computing. What types of experiences might Microsoft's investments in each yield as mixed reality mixes with AI?

Mixed reality is the continuum from augmented reality (AR) to virtual reality (VR). The former superimposes holograms in a user's field of view via wearable technology such as Microsoft's AR headset, HoloLens. The latter immerses wearers in virtual environments via VR headsets like the Oculus Rift and Windows Mixed Reality headsets. The technology's goal is a convergence of AR and VR on a single, untethered wearable whose lenses transition between clear (AR) and opaque (VR).

AI is the field of computer science that seeks to create intelligent machines that can behave, work and react like humans. AI has already begun permeating our lives. Intelligence-imbued systems are part of manufacturing, computer security, apps such as Microsoft's Seeing AI and Hearing AI, messaging platforms like Google's Allo, smartphone cameras, a host of digital assistants and more. Microsoft's and Google's CEOs, Satya Nadella and Sundar Pichai respectively, have made AI a driving force in their companies' missions.

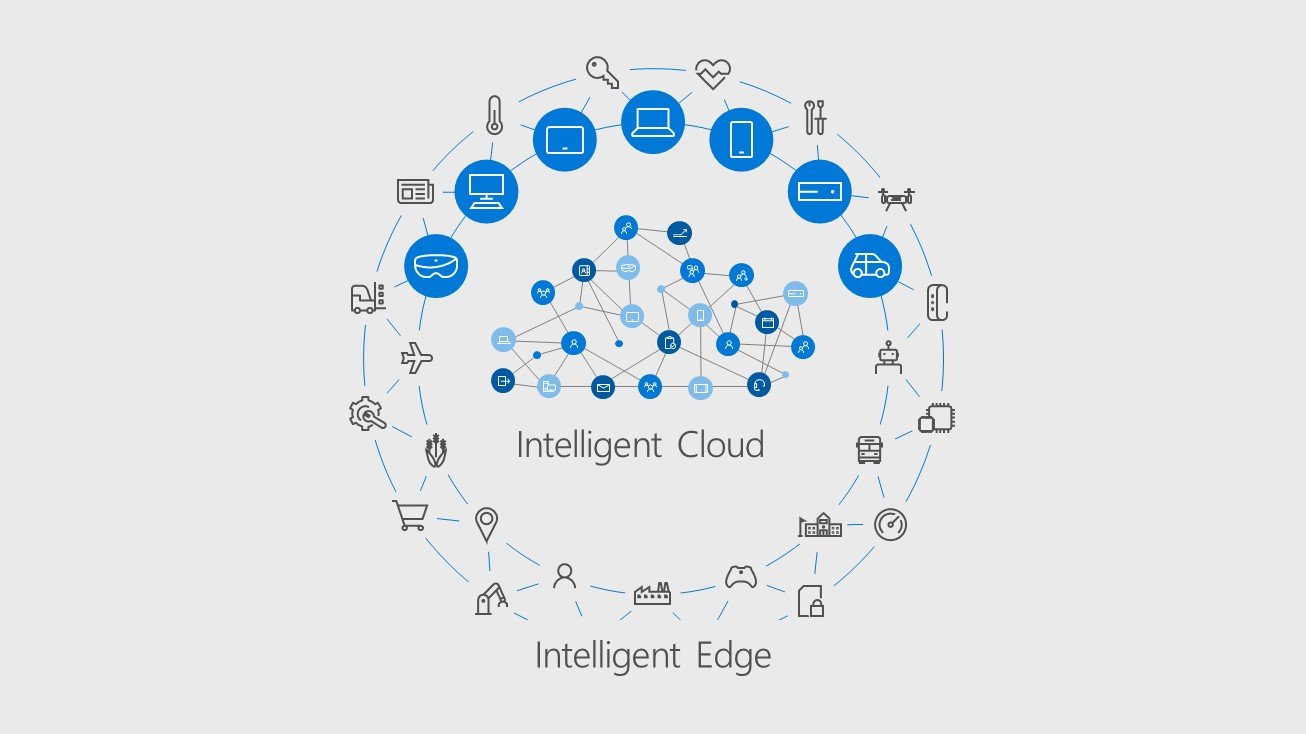

Via its Conversations as a Platform and Bot Framework strategies, Microsoft is striving to infuse intelligence into its products, services and IoT offerings. Additionally, Microsoft's intelligent cloud and intelligent edge, along with 5G networks, are evolving platforms that are key to supporting AI-driven products and services. Given AR's potential as the future of computing and AI's ongoing evolution, how might the fusion of the two be implemented in business, in healthcare and among consumers in the future?

HoloLens 2.0 introduces on-device AI

When HoloLens debuted in 2015, even Microsoft's critics were impressed with this wearable computer that projected interactive holograms into the wearer's world. A limited field of view (FOV), high cost and the lack of a consumer version have since tempered the excitement. Still, strategic positioning in specific industries (from healthcare to education to manufacturing) and the growth of Windows Mixed Reality (formerly Windows Holographic) as a platform have kept Microsoft's AR vision moving forward.

In fact, the next version of HoloLens is expected in 2019, a year earlier than initially planned, and will sport a custom AI coprocessor. This coprocessor will be part of HoloLens' Holographic Processing Unit (HPU), a multiprocessor that currently helps make HoloLens the first and only fully self-contained holographic computer. The AI coprocessor will enable HoloLens to run deep neural networks (DNNs) natively. Let me explain.

Deep learning is important for computer vision and the other recognition tasks that systems like HoloLens require to "understand" their environment. Neural networks are systems loosely modeled on parts of the human brain that enable computers to approximate the way we process information. They underpin much of the recent progress in AI. Microsoft's AI coprocessor for HoloLens 2.0 will run continuously off the HoloLens battery, allowing a persistent AI presence in the mixed reality headset. Marc Pollefeys, director of science for HoloLens, said:

This is the kind of thinking you need if you're going to develop mixed reality devices that are themselves intelligent. Mixed reality and artificial intelligence represent the future of computing, and we're excited to be advancing this frontier.

Intelligent edge, AI-driven cameras and mixed reality collide

AR devices must be able to "perceive" and "understand" their environment and convey relevant data through overlaid images in the wearer's field of view. This is where Microsoft's AI-driven camera technology comes in. It is designed to perceive the physical world, understand activity, recognize objects and people, and proactively act on what it sees. Microsoft is marketing this ability to search and catalog people, things and activity in the real world (as easily as search engines search digital data) as the merger of the digital and physical worlds. Fusing this level of AI "perception" of the physical world with AR, the future of computing that blends digital and physical environments, is a logical next step.

Microsoft's AI-driven camera tech and AR will likely merge.

AI capabilities are poised to increase and become more relevant as edge computing becomes more pervasive. 5G networks, which will begin rolling out in 2018, will provide the infrastructure for the immense amount of data processing at the cloud's edge that practical AI scenarios require. 5G's lower latency and support for distributed networks may also enable collaborative, intelligent AR settings.

Future AI-infused HoloLens or HoloLens-inspired devices connected to the intelligent edge, in enterprise and other settings, may have profound implications for business and everyday life, especially if merged with Microsoft's AI-driven camera tech.

AI, mixed reality and the future workplace

Employees of the future (particularly in specific industries) may don company-issued mixed reality glasses as their PCs. Desktop PCs tether employees to desks, which can hurt productivity when employees need to be mobile, even within the workplace. Cell phones, which provide a degree of freedom, are just another screen that must be retrieved and then focused on. This is neither a natural nor an efficient way to access, interact with or communicate information. Additionally, for employees who could benefit from easy access to certain information throughout the day (such as those on a manufacturing floor), phones and desktops are impractical.

Microsoft suggests smartglasses will replace smartphones

Wearable computers (AR glasses) may solve these and other challenges. Imagine a team of machine workers wearing intelligent, connected headsets that provide feedback on their productivity throughout the day. Or technical support staff who see equipment status in real time from anywhere. Technicians remotely guiding minor fixes via AR headsets, or directly performing hologram-supported fixes, are also possible scenarios. Managers equipped with intelligent wearables could also supervise staff productivity and activity more closely, though this could give a whole new meaning to micromanaging.

AI, using the wearable's camera, could support inventory control and process stock much faster than humans. The same can be said of safety checks and other manual evaluations of equipment and the work environment. AI could proactively surface information in the wearer's FOV and, in a connected environment, update everyone else who needs that information. Microsoft has even demonstrated how Microsoft Teams can convey AR data to more traditional devices like PCs.

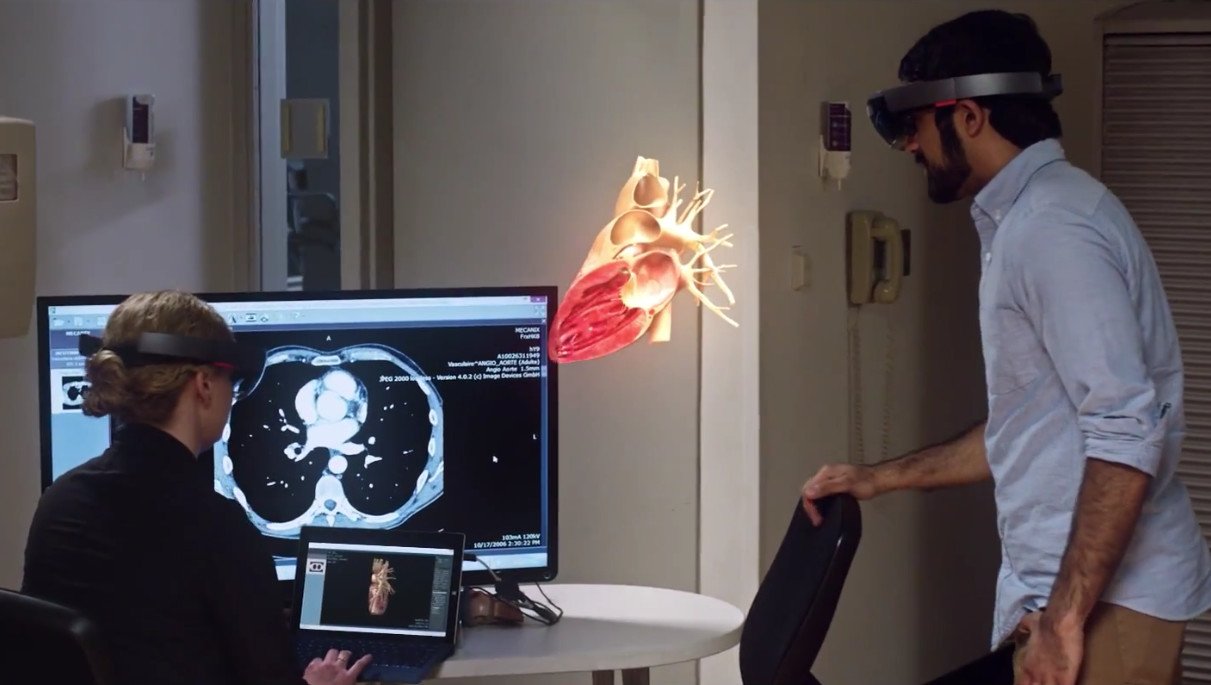

AI and AR in healthcare

In healthcare, patient information and vitals could be displayed in the field of view of nurses, who are highly mobile, rather than just on a monitor at a nurses' station.

AI-supported wearables could also make shift transitions smoother. Details from the previous shift could populate a nurse's FOV as he dons the wearable. Or, as doctors enter a patient's room, AI could surface all relevant information.

An AI system using facial recognition that has previously encountered and logged information about a patient's family members could potentially surface that information for staff or doctors meeting those individuals for the first time. The applications are endless.

The AR and AI life

Smart wearables in everyday life could help us "recognize" strangers. AI could proactively cross-reference facial recognition data from Facebook, other social media platforms and public data, making chance encounters less of a mystery. Imagine this tech on the faces of cops. Officers may one day be able to instruct AI to "tag" a person of interest, prompting the AI to follow that person (via public AI-driven cameras) and relay surveillance information to the officers' headsets.

AI-driven camera systems could proactively communicate concerns to security guards' headsets. This would be far more efficient than relying solely on guards watching multiple monitors. Pilots could see what air traffic controllers see and vice versa. The list goes on.

The future laid out here is admittedly speculative, but even if these scenarios don't play out, the direction is clear. Microsoft and others are making AI an integrated part of their AR efforts.

Microsoft has demonstrated that AI can search and understand the physical world and act on what it sees. The company's long-term goal is likely an AI-enhanced holographic computing platform that allows users to continue interacting with the physical environment while AI, proactively and upon request, delivers insights based upon its perceptions. An AI-human-holographic computing synergy may be the future of computing.

What scenarios do you envision?

Jason L Ward is a columnist at Windows Central. He provides unique big picture analysis of the complex world of Microsoft. Jason takes the small clues and gives you an insightful big picture perspective through storytelling that you won't find *anywhere* else. Seriously, this dude thinks outside the box. Follow him on Twitter at @JLTechWord. He's doing the "write" thing!