Groundbreaking tech will make Cortana's hearing as good as ours

If you feel like Cortana just doesn't get you, improvements may be on the way.

We all want to know the person we're talking to is listening to us. We extend this expectation to our "non-human" AI companions as well. Having to repeat oneself, once we've mentally moved from "expression of thought" to "expectation of response," only to be volleyed back to "expression of thought" because the listener didn't hear us, is an exercise in frustration.

Talking to digital assistants can be frustrating.

This all-too-common pattern of exchange between humans and our AI digital assistants has caused many of us to default to more reliable "non-verbal" exchanges.

Ironically, speaking to our assistants is supposed to be a more natural and quicker way to get things done.

Perhaps science fiction has spoiled us. The ease with which Knight Rider's Michael Knight verbally interacted with his artificially intelligent Trans Am, KITT (and other fictional AI-human interactions), painted a frictionless picture of verbal discourse. The bar has been set very high.

Microsoft may have gotten us a bit closer to that bar, and to a route out of the pattern of frustration that talking to digital assistants often engenders.

Crystal clear

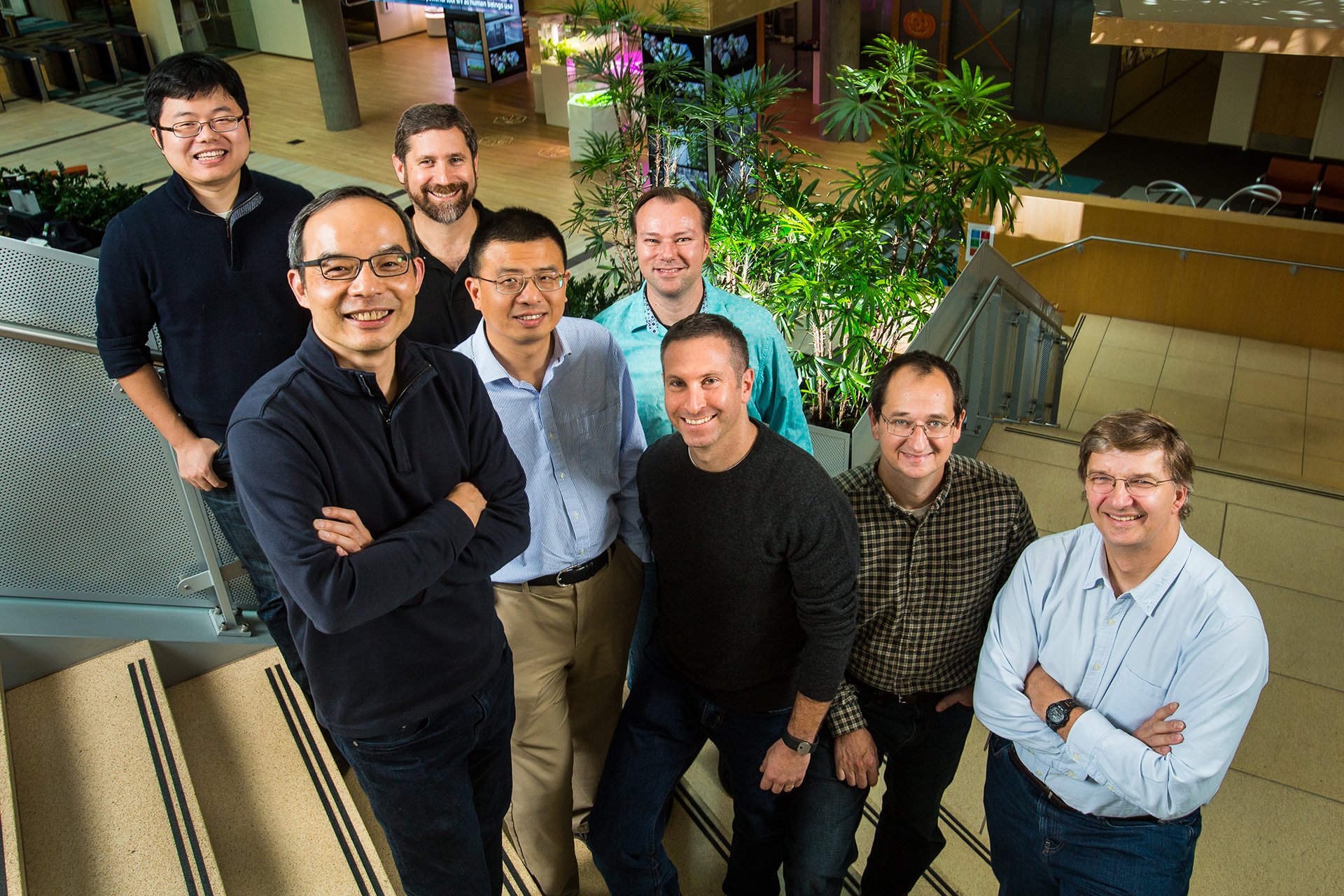

On Monday, October 17th, 2016, Microsoft announced that its latest automated system had reached human parity in speech recognition. In a nutshell, Microsoft's non-human system was just as accurate as professional human transcriptionists when hearing and transcribing conversational speech.

Microsoft's automated system performed as well as professional transcriptionists.

The tests included pairs of strangers discussing an assigned topic and open-ended conversations between family and friends. The automated system surpassed the professional transcriptionists' word error rates of 5.9% and 11.3%, respectively, on the two tests.
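For readers curious how a word error rate is scored, here is a minimal Python sketch. It assumes the common definition (substitutions, deletions and insertions divided by the number of words in the reference transcript) and a simple lowercase, whitespace-based tokenization. The function name `word_error_rate` and the sample sentences are illustrative only; this is not Microsoft's evaluation code, and real benchmarks apply more careful text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / words in reference.

    Assumption: words are compared case-insensitively after splitting on
    whitespace. Real evaluations normalize text far more carefully.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the word-level edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # i deletions turn i reference words into an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word was missed
                curr[j - 1] + 1,     # insertion: extra word was transcribed
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: one wrong word out of five.
wer = word_error_rate("turn on the kitchen lights", "turn on the chicken lights")
print(f"{wer:.1%}")  # prints 20.0%
```

By this measure, a 5.9% word error rate means roughly six mistakes for every hundred words spoken.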


Harry Shum, executive vice president of Microsoft's Artificial Intelligence and Research group, praised this accomplishment:

Even five years ago, I wouldn't have thought we could have achieved this. I just wouldn't have thought it would be possible.

This breakthrough, of course, is foundational to the realization of the more complex AI interactions we have come to believe are just around the corner. An assistant's ability to accurately hear us, as in human interactions, is a prerequisite to its understanding us. The journey to understanding is, of course, the next step. That may or may not take as long as our trek to human parity in "hearing" has taken.

Competition is good

The road to human parity in speech recognition began in the 1970s with DARPA, a research division of the US Department of Defense. Microsoft's subsequent decades-long investments led to artificial neural networks, which are patterned after the biological neural networks of animals. The combination of convolutional and Long Short-Term Memory neural networks helped Redmond's system become "more human". Xuedong Huang, Microsoft's chief speech scientist, exclaims:

This is an historic achievement.

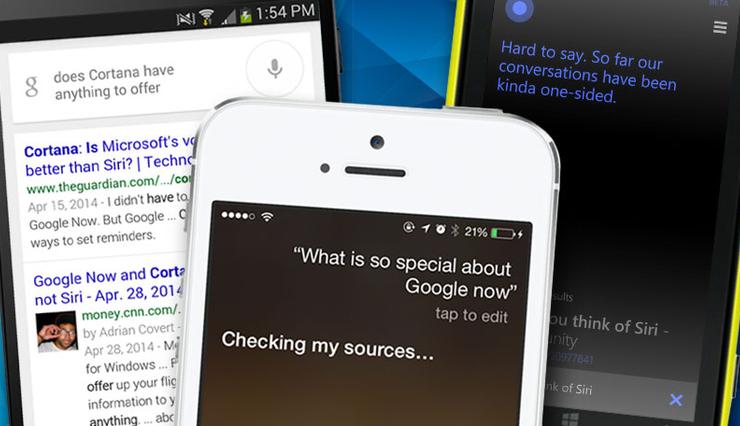

And, of course, it is. Microsoft is not alone in its efforts to evolve AI understanding of human language, however. Google and Apple have invested in neural networks as well. The boost to Siri's performance is likely attributable to Cupertino's investments. Furthermore, Google's access to a massive repository of data through its search engine and ubiquitous Android OS has helped Mountain View's voice-recognition efforts make tremendous strides.

Apple is striving to catch up to Microsoft and Google.

Google's and Microsoft's industry-leading progress with deep neural networks, AI, natural language processing and machine learning has likely spurred Apple's most recent investments in Siri.

Cupertino is looking to make its bounded assistant more competitive, if recent job postings for the Siri team in Cambridge, UK are any indication:

Join Apple's Siri team…and be part of revolutionizing human-machine interaction! You will be joining a highly talented team of software engineers and machine learning scientists to develop the next generation of Siri. We use world class tools and software engineering practices and push the boundaries of artificial intelligence with a single aim: make a real difference to the lives of the hundreds of millions of Siri users.

This will undoubtedly increase Siri's reliability over time. Still, these advantages will be limited to Siri users within Apple's walled garden of 11.7% of all smartphone users and around 10% of all PC users.

Canvassing the competition

In a world where digital experiences are transient, the unbounded (cross-platform) nature of Cortana and Google Now is arguably an advantage these assistants have over Siri. Combined with the Bing and Google search engine backbones, respectively, Microsoft's and Google's investments in conversation as a canvas position these companies ahead of the "iPhone-focused" Apple.

Speech recognition is key to Microsoft's Conversations as a Platform plan.

Microsoft's forward-thinking platform focus is the backdrop that supports its achievement of human parity in speech recognition. This achievement is important to Nadella's Conversation as a Platform strategy, which treats human language (written and verbal) as a UI. It's a critical piece of the complex puzzle of making AI and human interaction more natural. Nadella had this to say about Conversation as a Platform:

We're in the very early days of this…It's a simple concept, yet it's very powerful in its impact. It is about taking the power of human language and applying it more pervasively to all of our computing. ...we need to infuse into our computers and computing intelligence, intelligence about us and our context…by doing so…this can have as profound an impact as the previous platform shifts have had, whether it be GUI, whether it be the Web or touch on mobile.

Cortana on PC, Edge, Windows Mobile, iOS, Android and Xbox is a big part of this vision. Shum said of Redmond's speech recognition achievement and Microsoft's AI assistant:

This will make Cortana more powerful, making a truly intelligent assistant possible.

Going forward

Customizing Cortana to each region, like Korea, before her launch there, provides a tailored regional experience but slows her global expansion. This is a sore spot for many users but is an essential part of Microsoft's personal digital assistant vision. Still, it is a disadvantage Cortana has in relation to the more widely distributed Siri and Google Now.

Finally, the lack of a standalone unit such as Alexa and Google Home is an apparent hole in Microsoft's strategic positioning of Cortana. Perhaps Redmond's groundbreaking success in speech recognition will give such a future device a strategic advantage over the competition. Fans are asking. I wonder if Microsoft can hear them.

Jason L Ward is a Former Columnist at Windows Central. He provided a unique big picture analysis of the complex world of Microsoft. Jason takes the small clues and gives you an insightful big picture perspective through storytelling that you won't find *anywhere* else. Seriously, this dude thinks outside the box. Follow him on Twitter at @JLTechWord. He's doing the "write" thing!