It's not uncommon for an abundance of leaks and rumors to drop in the lead-up to a major event like CES 2025, and that's exactly what happened regarding AMD's RDNA 4 graphics cards.

Everything from naming schemes to potential designs was popping up, and many rumors ultimately became fact when I received AMD's CES press package regarding its new Radeon GPUs for 2025. All major publications received the same Radeon info ahead of AMD's presentation, and we were all left scratching our heads when there was no on-stage mention of RDNA 4.

What happened on AMD's side, and why am I rethinking my decision to upgrade to NVIDIA's upcoming RTX 5070?

Why did AMD pull its RDNA 4 GPU presentation at the last second?

Tom's Hardware's Paul Alcorn spoke with AMD execs David McAfee and Frank Azor following the company's CES presentation. McAfee gave assurances that RDNA 4 is on track in terms of development and expectations, blaming the lack of live coverage on the CES presentation's 45-minute limit. Azor also noted that AMD wanted to see what NVIDIA had to demonstrate at its CES keynote, which turned out to be RTX 5000 GPUs from the "Blackwell" architecture.

I watched NVIDIA's keynote speech live, and when it was announced that the $549 RTX 5070 could match the power of a far more expensive RTX 4090, I earmarked the upcoming GPU as the next piece of hardware going into my gaming PC.

As the hype from NVIDIA's presentation died down, it became clear that a lot of PC enthusiasts weren't too excited about NVIDIA's performance claims. Sure, the RTX 5070 is an improvement over the previous "Ada" generation, but the whole "as powerful as an RTX 4090" claim leans heavily on AI upscaling and the new DLSS 4.

The RTX 5070 is far from matching the RTX 4090 in terms of raw hardware power, plus it has half of the VRAM at 12GB (though it is GDDR7 compared to GDDR6X). NVIDIA does claim that its upgrade to DLSS 4 will lower VRAM demands, but by how much remains to be seen. Considering the RTX 4060 with 8GB of VRAM gets walloped by new games like Indiana Jones and the Great Circle, having as much VRAM as possible in your GPU doesn't seem like a bad idea as we wade into 2025.

Now that we're on the other side of CES 2025, more new leaks and rumors involving AMD's next-gen RDNA 4 GPUs have been popping up. If AMD can follow through when it officially announces Radeon RX 9000-series cards, I might be keeping my all-AMD PC intact.

The official RDNA 4 news from AMD

As I wrote in a post highlighting the announcement of the Ryzen 9 9950X3D and RDNA 4 GPUs, AMD made its Radeon RX 9070 XT and RX 9070 GPUs official in a CES press release. The company noted that the new RDNA 4 architecture offers improvements for compute unit optimization, IPC and clock frequency, AI compute architecture, and media encode/decode engines.

The 4nm RDNA 4 architecture has second-gen AI accelerators, third-gen ray tracing accelerators, and a second-gen Radiance Display engine. Those new AI accelerators seem to be a big key here, as AMD also revealed its new FidelityFX Super Resolution 4 (FSR 4) upscaling technology.

Rather than continuing to rely on hand-tuned temporal upscaling algorithms a la FSR 3, the new FSR 4 makes the change to machine learning just like NVIDIA's DLSS. From the press release, AMD says it's made "dramatic improvements in terms of performance and quality compared to prior generations."

Hardware Unboxed created a great video from the CES 2025 showroom detailing the differences between FSR 3.1 and FSR 4. With the assumption that the demo was running two prototype versions of the flagship Radeon RX 9070 XT, it quickly becomes clear that AMD isn't bluffing with its performance claims.

FSR 3 is notoriously bad at providing small but high-quality details, especially in fast-moving scenes. Those difficulties become more pronounced as you raise the screen's resolution. The AMD demo, however, seems to have alleviated many of those issues. Even background details are more pronounced and clear when using FSR 4. This demo provided by Hardware Unboxed is very limited, but it's promising.

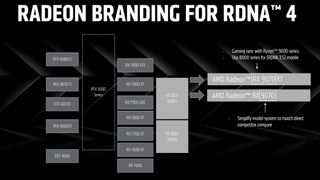

Aside from a slide explaining why AMD chose to switch up its GPU naming convention — an effort to align the latest GPUs with the latest Ryzen CPUs and NVIDIA's own branding — that's about all the official information we have from AMD.

Post-CES leaks have me reconsidering my RTX 5070 decision

From my perspective, AMD's last-minute pull of its RDNA 4 announcement is smart. It knew that NVIDIA would be stealing a lot of the GPU news space with its RTX 5000 announcement, and I'd wager that AMD has gained some extra coverage with its RDNA 4 no-show.

My personal gaming PC recently received a new Ryzen 7 9800X3D processor (along with a new motherboard and memory), and the Radeon RX 6800 GPU inside is now next on the list of upgrades.

I had it in mind that I'd be switching to NVIDIA for this generation, mostly on the back of DLSS and the fact that AMD really didn't have anything to compete at the level of performance I want, but that continues to change as new leaks and rumors come out in the wake of CES.

One of the most recent leaks, reported by Videocardz, pegs the RX 9070 XT near the same level as the RTX 4070 Ti SUPER in Cyberpunk 2077 and Black Myth: Wukong. More interesting is that the leaks cover FHD, QHD, and 4K resolutions, and that both of these games are optimized for NVIDIA hardware.

In another leak reported by Videocardz, someone on the Chiphell forum posted a screenshot of what is presumably the RX 9070 XT, sporting 16GB of GDDR6 VRAM and a 329W TGP.

Considering I plan on using the Ryzen 7 9800X3D for years to come, dipping again into a full AMD build — with additional benefits coming from AMD's Infinity Cache tech — keeps getting more appealing. Now I just have to wait and see what the new RDNA 4 GPUs will cost before I make a final decision.

If you would like to attempt to get an RTX 5000 GPU on launch day, check out my guide on where to buy RTX 5090 and RTX 5080. It has a bunch of retailers and stocked brands to consider.

Cale Hunt brings to Windows Central more than eight years of experience writing about laptops, PCs, accessories, games, and beyond. If it runs Windows or in some way complements the hardware, there’s a good chance he knows about it, has written about it, or is already busy testing it.

GraniteStateColin A lot of AMD fans have pointed out their past graphics cards' comparatively strong raster performance and given a pass on the weak ray tracing as less important to gaming. That's fair, but I think it misses a big-picture point: when game developers can assume users have sufficient graphical power to handle full path tracing, they can stop going through all the effort to pre-bake lighting. That's a massive effort in game dev that can be redirected to actual game development when they know the gamer's system can handle the lighting automatically.

For this reason, I'm very excited that AMD has focused on boosting its ray tracing performance with RDNA 4. For me, that's the most important area for graphics cards these days. When some future gen Xbox and PlayStation can do the full path tracing mode of Cyberpunk 2077 and all the new games of the time, that will meaningfully change game development and we all win. And with AMD graphics chips powering the consoles, this is a critical move.

I'm not certain what level of graphics card is needed for this (others here are more expert on that than I), but my sense is that it's about the RTX 4070. So we need to get that level of tech down to near entry level pricing for gaming PCs and included in the consoles. If the AMD 9070 cards are priced competitively (and if MS and Sony are using that in the next gen console), this could be a huge moment in gaming. I expect the RTX 5060 will also handle this sufficiently at a more entry level price point.

Even for users who think they don't care about the aesthetic improvements gained by ray tracing or path tracing to lighting, you should care about its mass adoption for the impact to game development.

Russianjesus What's with every single tech reviewer/journalist just pretending the new neural texture compression in the 50 series just doesn't exist? According to the information Nvidia gave the 12gb on the 5070 would be as powerful as 16 or even 18gb in an AMD card