Buying into early access for PC games is a lottery I want no part of

I nearly bought Starfield's upgraded edition, and I'd have wanted my money back.

It's been a big year for PC gaming. So many high-profile, epic titles have come our way; it really is a great time to be a gamer. But it also feels like an incredibly frustrating time. (I swear, I'm not out here just to rant.)

There's an issue rearing its head around the grand release of Starfield: games asking you to pay good money for first dibs ahead of their global launch, only for you to find they're a bit of a mess. Not to be confused with actual early access titles, of course, where you buy in knowing that you're playing a game still in development.

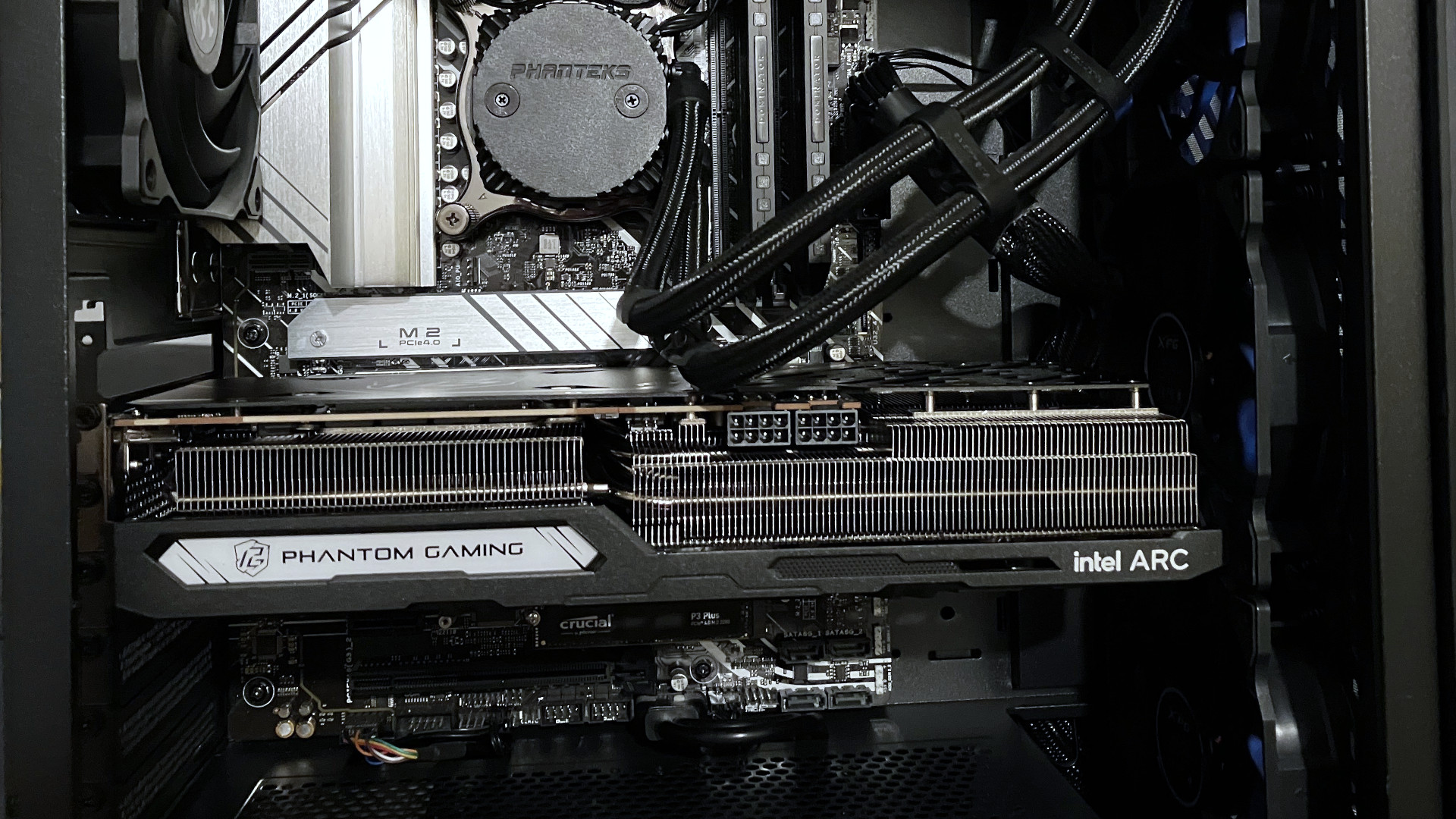

I was so close to springing for the Starfield premium upgrade to use alongside PC Game Pass to play early. I started getting a bad case of FOMO, but I held off, and I'm glad I did. Because I wouldn't have had a good time at all. Part of that is because I'm an Intel Arc user, but this whole 'ship and fix it later' trend has been building for long enough. I, for one, will not be parting with cash to play games early anymore.

So what was wrong with Starfield?

Starfield is, by Bethesda standards, well-polished. There are bugs, some hilarious, but on the whole, it seems to have been a success. That is, unless you have an Intel Arc graphics card inside your desktop PC, which I do. For those people, admittedly a minority, Starfield was an unplayable hot mess.

If you're paying $100 for a game, with much of that extra cost going toward playing early, you should at least be able to play it. Some were irritated by Bethesda's omission of NVIDIA DLSS upscaling tech, possibly due to money changing hands with AMD, but at least those people could still play the game.

If you had an Intel Arc graphics card, you had to suck it up or get a refund. Fair play to Intel, with the engineers there working over the holiday weekend to get some fixes out ASAP, but again, this is post-launch, after folks have parted with money.

Imagine the uproar if Starfield had been so broken on NVIDIA GPUs. It would have been a much bigger story, but the point remains: whichever hardware you choose, you expect to be able to play the games you're buying. Why should it be a lottery? For $100, you want your game ready and playable.


Quality control is suffering somewhere

I'm not a game developer, and I truly do appreciate that this stuff is hard. The scope of modern AAA games is incredible, and we're lucky to be able to play some of these experiences. But whatever process is used to test these things seems, at the very least, to be falling short.

Take my own recent experience with Remnant 2. I had to pass the review to a colleague because it was completely broken and unplayable on my PC. The review notes even acknowledged an issue with the XeSS upscaling tech, which turned out to be practically required for smooth gameplay. But, again, this was an issue present during an early access period for a premium-priced game.

And lest we forget the PC port of The Last of Us Part 1. What a horrendous mess that was.

I can't be the only one going into new game launches not only excited to start playing but also wondering if I'll be able to play at all. If you're lucky enough to own an RTX 4090 or some equally brutal GPU, you'll likely be fine, but you probably don't. As we know from, you know, data, cheaper and older graphics cards are far more popular.

I don't know the process, but I know it needs to be improved. There are now three graphics providers and a wide range of hardware combinations these games need to be playable on. Sometimes, we get lucky, and games aren't pushed until they're ready; other times, not so much. But the people making the games and those making the hardware that we need to play these games have to become better at working together.

Granted, it would likely be easier without executives pushing for releases as soon as humanly possible, but a lot needs to come together to make a PC game work well. And increasingly, it isn't.

If you bought a TV and the picture looked like crap when you fired it up for the first time, you would return it. I don't get why it's OK to ship games that are flat-out broken for some people who are spending a lot of their money on these things. Promising to fix it later is not OK.

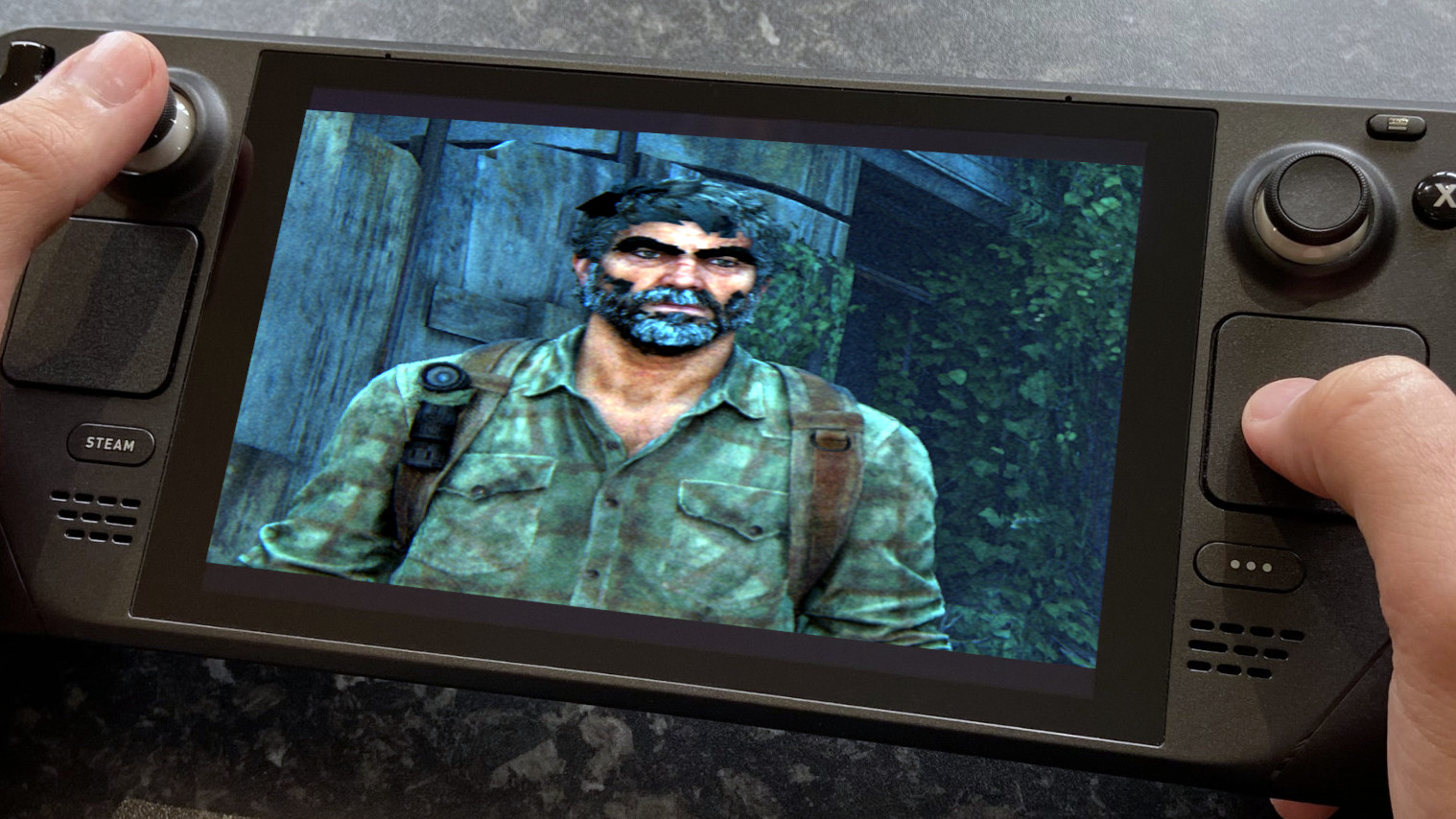

No more early access PC, more console gaming

My interest in PC gaming was rekindled from the minute I got my Steam Deck, but it's being tested. At least on the Steam Deck, you know the limitations and that there is an element of potluck as to how well a game will play. But it's also an ancillary device.

The increasing chances of having a rough time at the launch of a PC game, be that early access or even day one, are making me think twice about taking the plunge early. There are plenty of examples where everything has been hunky-dory right out of the gate, but it still feels increasingly like gambling to me.

At least when I fire up a new game on my Xbox Series X in its earliest days, it usually seems to work. The console experience as a whole is simply less frustrating when you just want to play a game.

Maybe that's where I need to refocus my playing time.

Richard Devine is a Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found on Android Central and iMore as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine