"When you use the model, you save enormous amounts of energy" — NVIDIA CEO says they can't do graphics anymore without AI

In a recent interview, Jensen Huang outlined just how important AI is now for PC gaming, and it makes a lot of sense.

What you need to know

- In a recent interview, NVIDIA CEO Jensen Huang talked up the role AI plays in his company's products.

- Simply put, Huang says NVIDIA can no longer do graphics without using AI.

- According to Huang, using AI models in tools like DLSS makes rendering both faster and more energy efficient.

AI is everywhere, whether you're a fan of it or not, and in some cases it's even in things you've been using and taking for granted, like your PC gaming rig.

I've written in the past about how I hope that AI could lead to positive developments in gaming, like bringing the movie Free Guy to reality, but there's a very different angle, too.

In a recent interview (via PC Gamer), NVIDIA CEO Jensen Huang talked about exactly this other side of AI, and honestly, I hadn't considered some of it. I'm probably not alone, either.

One passage in particular really stands out:

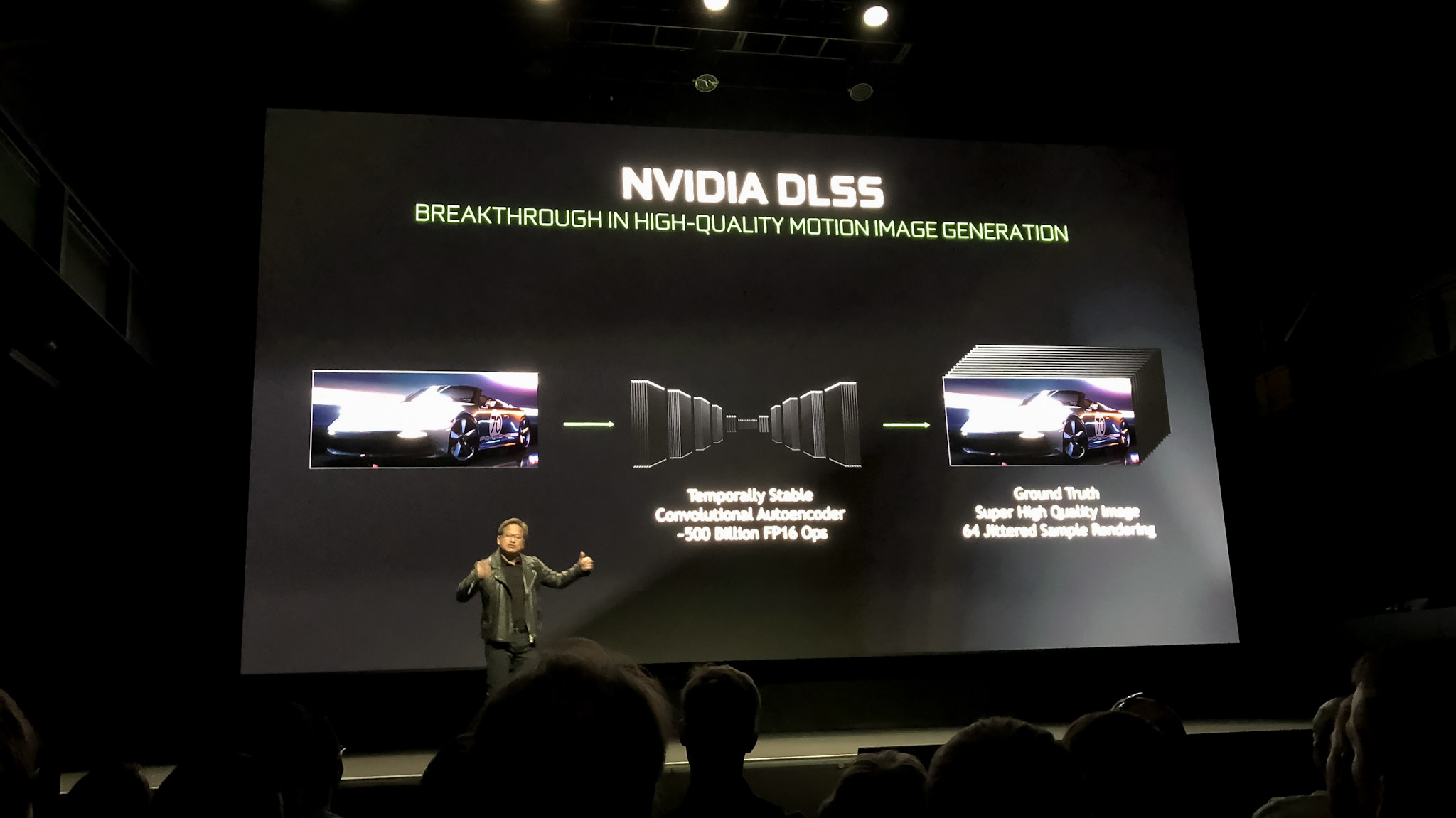

We can't do computer graphics anymore without artificial intelligence. We compute one pixel, we infer the other 32. I mean, it's incredible. And so we hallucinate, if you will, the other 32, and it looks temporally stable, it looks photorealistic, and the image quality is incredible, the performance is incredible, the amount of energy we save -- computing one pixel takes a lot of energy. That's computation. Inferencing the other 32 takes very little energy, and you can do it incredibly fast.

Jensen Huang, NVIDIA CEO
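To put Huang's "compute one, infer 32" arithmetic in perspective, here's a rough back-of-the-envelope sketch in Python. The per-pixel energy costs are made-up placeholders, not NVIDIA figures, and the function names are purely illustrative; the point is only to show how the savings depend on how much cheaper inference is than rendering.

```python
# Back-of-the-envelope model of Huang's "compute one pixel, infer the other 32".
# ASSUMPTION: the per-pixel costs below are hypothetical placeholders in
# arbitrary units, not measured or published NVIDIA figures.
RENDER_COST = 1.0   # hypothetical energy to fully compute (render) one pixel
INFER_COST = 0.05   # hypothetical energy to infer one pixel with an AI model


def energy(rendered: int, inferred: int,
           render_cost: float = RENDER_COST,
           infer_cost: float = INFER_COST) -> float:
    """Total energy for a group of pixels, some rendered and some inferred."""
    return rendered * render_cost + inferred * infer_cost


def rendered_fraction(rendered: int = 1, inferred: int = 32) -> float:
    """Share of final pixels that were computed the traditional way."""
    return rendered / (rendered + inferred)


def energy_saved(rendered: int = 1, inferred: int = 32,
                 render_cost: float = RENDER_COST,
                 infer_cost: float = INFER_COST) -> float:
    """Fraction of energy saved versus rendering every pixel conventionally."""
    total = rendered + inferred
    brute_force = energy(total, 0, render_cost, infer_cost)
    hybrid = energy(rendered, inferred, render_cost, infer_cost)
    return 1.0 - hybrid / brute_force


if __name__ == "__main__":
    print(f"Pixels actually rendered: {rendered_fraction():.1%}")
    print(f"Energy saved (with assumed costs): {energy_saved():.1%}")
    # If inference were as expensive as rendering, the saving would vanish.
    print("Energy saved if inference cost = render cost: "
          f"{energy_saved(infer_cost=RENDER_COST):.1%}")
```

With those placeholder values, only about 3% of the pixels are actually rendered and the estimated saving comes out at roughly 92%. Set the inference cost equal to the rendering cost and the saving drops to zero, which is really the crux of Huang's argument: the win depends entirely on inference being far cheaper than computing a pixel from scratch.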

AI uses insane amounts of power, but we haven't considered the opposite

There has been much talk of the biblical amounts of energy consumed by the likes of OpenAI in training and running their AI models. It's generally accepted that this stuff isn't great for the planet without a sustainable way of powering it.

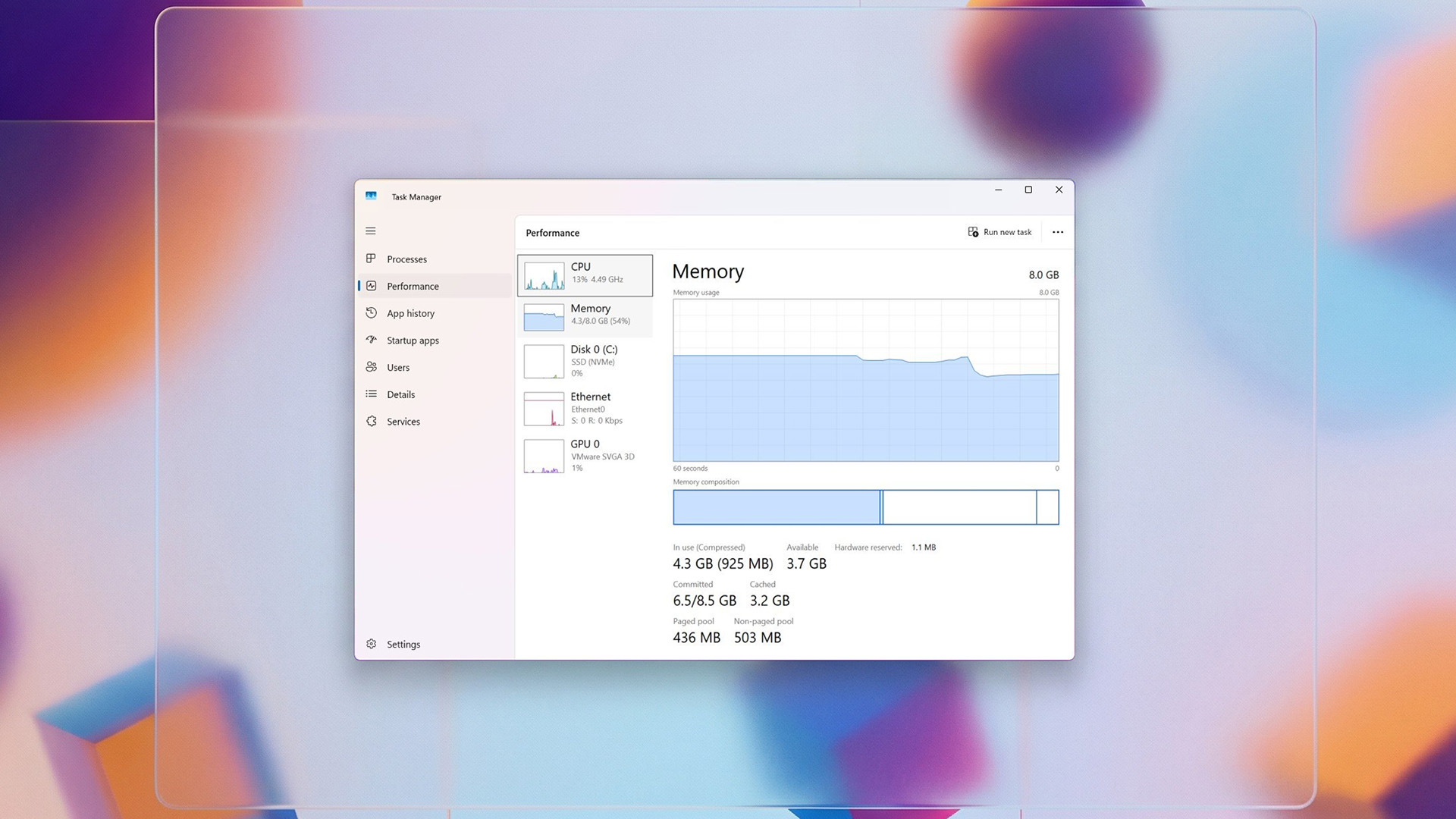

Today's graphics cards are also thirsty beasts. I'll never forget opening my RTX 4090 review sample and audibly gasping at the bundled adapter cable, which had four 8-pin power connectors on it. Granted, this is still the best of the best, but it's capable of drawing a crazy amount of power.

What never occurred to me is how AI, through DLSS, can actually reduce the energy consumed while generating the glorious visuals in today's hottest games. DLSS is easily the best implementation of upscaling, and its biggest competitor, AMD's FSR, still uses a conventional compute-based approach rather than AI.

NVIDIA's GPUs have incredible AI processing power, as becomes evident when they're compared with the NPUs in the new breed of Copilot+ PCs. It's both interesting and a little shocking that the CEO of the biggest player in the game has said outright that it's no longer possible to do graphics without using AI in this way.

Even if you're not a fan of generative AI or its infusion into search, art, or a multitude of other purposes, if you're a gamer, there's a good chance you're already a fan. Whenever the RTX 5000 series finally surfaces, it looks like its AI chops are going to be more important than ever before.

Richard Devine is a Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found on Android Central and iMore as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine